Message boards : News : ATM

| Author | Message |

|---|---|

|

Hello GPUGRID! | |

| ID: 60002 | Rating: 0 | rate:

| |

|

I’m brand new to GPUGRID so apologies in advance if I make some mistakes. I’m looking forward to learn from you all and discuss about this app :) | |

| ID: 60003 | Rating: 0 | rate:

| |

|

Welcome! | |

| ID: 60005 | Rating: 0 | rate:

| |

|

Thanks for creating an official topic on these types of tasks. | |

| ID: 60006 | Rating: 0 | rate:

| |

|

Welcome and thanks for info Quico <nbytes>729766132.000000</nbytes> <max_nbytes>10000000000.000000</max_nbytes> https://ibb.co/4pYBfNS parsing upload result response <data_server_reply> <status>0</status> <file_size>0</file_size error code -224 (permanent HTTP error) https://ibb.co/T40gFR9 I will do test new test on new units but would probably face same issue if server have not changed. https://boinc.berkeley.edu/trac/wiki/JobTemplates | |

| ID: 60007 | Rating: 0 | rate:

| |

File size in past history that max allowed have been 700mb Greger, are you sure it was 700mb? From what I remember, it was 500mb | |

| ID: 60009 | Rating: 0 | rate:

| |

|

I have one which is looking a bit poorly. It's 'running' of host 132158l (Linux Mint 21.1, GTX 1660 super, 64 GB RAM), but it's only showing 3% progress after 18 hours. | |

| ID: 60011 | Rating: 0 | rate:

| |

|

I am trying to upload one, but can't get it to do the transfer: | |

| ID: 60012 | Rating: 0 | rate:

| |

|

I think mine is a failure. Nothing has been written to stderr.txt since 14:22:59 UTC yesterday, and the final entries are: + echo 'Run AToM' + CONFIG_FILE=Tyk2_new_2-ejm_49-ejm_50_asyncre.cntl + python bin/rbfe_explicit_sync.py Tyk2_new_2-ejm_49-ejm_50_asyncre.cntl Warning: importing 'simtk.openmm' is deprecated. Import 'openmm' instead. I'm aborting it. NB a previous user also failed with a task from the same workunit: 27418556 | |

| ID: 60013 | Rating: 0 | rate:

| |

|

Thanks everyone for the replies! Welcome and thanks for info Quico Thanks for this, I'll keep that in mind. From the succesful run the size file is 498M so it should be on the limit there to what @Erich56 says. But that's useful information for when I run bigger systems. I think mine is a failure. Nothing has been written to stderr.txt since 14:22:59 UTC yesterday, and the final entries are: Hmmm, that's weird. It shouldn't softlock in that step. Although this warning pops up it should keep running without issues. I'll ask around | |

| ID: 60022 | Rating: 0 | rate:

| |

|

This task didn't want to upload, but neither would GPUGrid update when I aborted the upload. | |

| ID: 60029 | Rating: 0 | rate:

| |

|

I just aborted 1 ATM Wu https://www.gpugrid.net/result.php?resultid=33338739 that had been running for over 7 Days, it sat at 75% done the whole time. Got another one & it immediately jumped to 75% done. Probably just abort it & deselect any new ATM Wu's ... | |

| ID: 60035 | Rating: 0 | rate:

| |

|

Some still running, many failing. | |

| ID: 60036 | Rating: 0 | rate:

| |

|

Three successive errors on host 132158 | |

| ID: 60037 | Rating: 0 | rate:

| |

|

I let some computers run off all other WUs so they were just running 2 ATM WUs. It appears they do only use one CPU each but that may just be a consequence of specifying a single CPU in the client_state.xml file. Might your ATM project benefit from using multiple CPUs? <app_version> nvidia-smi reports ATM 1.13 WUs are using 550 to 568 MB of VRAM so call it 0.6 GB VRAM. BOINCtasks reports all WUs are using less than 1.2 GB RAM. That means that my computers could easily run up to 20 ATM WUs simultaneourly. Sadly GPUGRID does not allow us to control the number of WUs we DL like LHC or WCG do. So we're stuck with 2 set by the ACEMD project. I never run more than a single PYTHON WU on a computer so I get two and abort one and then have to uncheck PYTHON in my GPUGRID Preferences just in case ACEMD or ATM WUs materialize. I wonder how many years it's been since GG has improved the UI to make it more user-friendly? When one clicks their Preferences they still get 2 Warnings and 2 Strict Standards that have never been fixed.<app_name>ATM</app_name> <version_num>113</version_num> <platform>x86_64-pc-linux-gnu</platform> <avg_ncpus>1.000000</avg_ncpus> <flops>46211986880283.171875</flops> <plan_class>cuda1121</plan_class> <api_version>7.7.0</api_version> Please add a link to your applications: https://www.gpugrid.net/apps.php ____________  | |

| ID: 60038 | Rating: 0 | rate:

| |

|

Is there a way to tell if an ATM WU is progressing? I have had only one succeed so far over the last several weeks. However, all of the failures so far were one of two types: either a failure to upload (and the download aborted by me) or a simple "Error while computing", which happened very quickly. | |

| ID: 60039 | Rating: 0 | rate:

| |

|

let me explain something about the 75% since it seems many don't understand what's happening here. the 75% is in no way an indication of how much the task has progressed. it is totally a function of how BOINC acts with the wrapper when the tasks are setup in the way that they are. | |

| ID: 60041 | Rating: 0 | rate:

| |

|

I have one that's running (?) much the same. I think I've found a way to confirm it's still alive. 2023-03-08 21:55:05 - INFO - sync_re - Started: sample 107, replica 12 2023-03-08 21:55:17 - INFO - sync_re - Finished: sample 107, replica 12 (duration: 12.440164870815352 s) which seems to suggest that all is well. Perhaps Quico could let us know how many samples to expect in the current batch? | |

| ID: 60042 | Rating: 0 | rate:

| |

|

Thanks for the idea. Sure enough, that file is showing activity (On sample 324, replica 3 for me.) OK. Just going to sit and wait. | |

| ID: 60043 | Rating: 0 | rate:

| |

I have one that's running (?) much the same. I think I've found a way to confirm it's still alive. Thanks for this input (and everyone's). At least in the runs I sent recently we are expecting 341 samples. I've seen that there were many crashes in the last batch of jobs I sent. I'll check if there were some issues on my end or it's just that the systems decided to blow up. | |

| ID: 60045 | Rating: 0 | rate:

| |

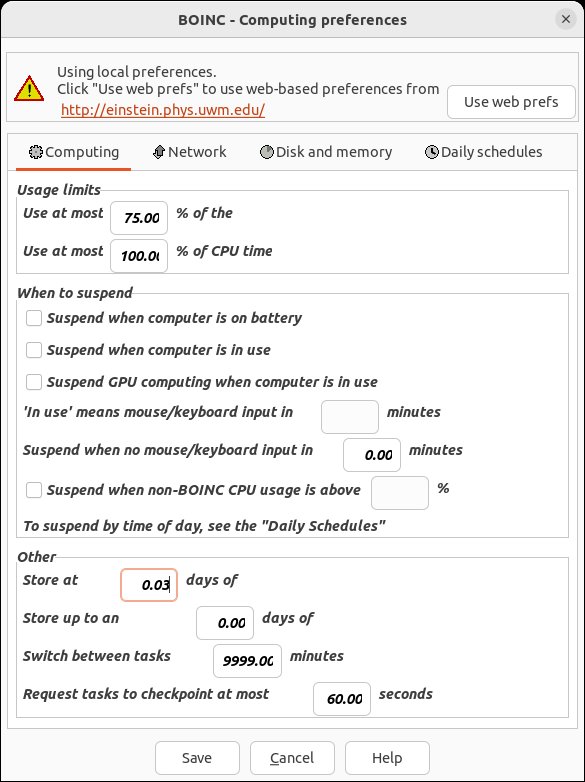

At least in the runs I sent recently we are expecting 341 samples. Thanks, that's helpful. I've reached sample 266, so I'll be able to predict when it's likely to finish. But I think you need to reconsider some design decisions. The current task properties (from BOINC Manager) are:  This task will take over 24 hours to run on my GTX 1660 Ti - that's long, even by GPUGrid standards. BOINC doesn't think it's checkpointed since the beginning, even though checkpoints are listed at the end of each sample in the job.log BOINC Manager shows that the fraction done is 75.000% - and has displayed that figure, unchanging, since a few minutes into the run. I'm not seeing any sign of an output file (or I haven't found it yet!), although it's specified in the <result> XML: <file_ref> <file_name>T_QUICO_Tyk2_new_2_ejm_47_ejm_55_4-QUICO_TEST_ATM-0-1-RND8906_2_0</file_name> <open_name>output.tar.bz2</open_name> <copy_file/> </file_ref> More when it finishes. | |

| ID: 60046 | Rating: 0 | rate:

| |

At least in the runs I sent recently we are expecting 341 samples. That's good to know, thanks. Next time I'll prepare them so they run for shorter amounts of time and finish over next submissions. Is there an aprox time you suggest per task? I'm not seeing any sign of an output file (or I haven't found it yet!), although it's specified in the <result> XML: Can you see a cntxt_0 folder or several r0-r21 folders? These should be some of the outputs that the run generates, and also the ones I'm getting from the succesful runs. | |

| ID: 60047 | Rating: 0 | rate:

| |

Can you see a cntxt_0 folder or several r0-r21 folders? These should be some of the outputs that the run generates, and also the ones I'm getting from the succesful runs. Yes, I have all of those, and they're filling up nicely. I want to catch the final upload archive, and check it for size. | |

| ID: 60048 | Rating: 0 | rate:

| |

Can you see a cntxt_0 folder or several r0-r21 folders? These should be some of the outputs that the run generates, and also the ones I'm getting from the succesful runs. Ah I see, from what I've seen the final upload archive has been around 500MB for these runs. Taking into accont what was mentioned filesize-wise in the beginning of the thread I'll tweak some paramaters in order to avoid heavier files | |

| ID: 60049 | Rating: 0 | rate:

| |

|

you should also add weights to the <tasks> element in the jobs.xml file that's being used as well as adding some kind of progress reporting for the main script. jumping to 75% at the start and staying there for 12-24hrs until it jumps to 100% at the end is counterintuitive for most users and causes confusion about if the task is doing anything or not. | |

| ID: 60050 | Rating: 0 | rate:

| |

Next time I'll prepare them so they run for shorter amounts of time and finish over next submissions. Is there an aprox time you suggest per task? The sweet spot would be 0.5 to 4 hours. Above 8 hours is starting to drag. Some climate projects take over a week to run. It really depends on your needs, we're here to serve :-) It seems a quicker turn around time while you're tweaking your project would be to your benefit. It seems it would help you if you created your own BOINC account and ran your WUs the same way we do. Get in the trenches with us and see what we see. | |

| ID: 60051 | Rating: 0 | rate:

| |

|

Well, here it is: | |

| ID: 60052 | Rating: 0 | rate:

| |

Next time I'll prepare them so they run for shorter amounts of time and finish over next submissions. Is there an aprox time you suggest per task? Once the Windows version is live my personal set-up will join the cause and will have more feedback :) Well, here it is: Thanks, for the insight. I'll make it save frames less frequently in order to avoid bigger filesizes. | |

| ID: 60053 | Rating: 0 | rate:

| |

|

nothing but errors from the current ATM batch. run.sh is missing or misnamed/misreferenced. | |

| ID: 60068 | Rating: 0 | rate:

| |

|

I vaguely recall GG had a rule something like a computer can only DL 200 WUs a day. If it's still in place it would be absurd since the overriding rule is that a computer can only hold 2 WUs at a time. | |

| ID: 60069 | Rating: 0 | rate:

| |

|

Today's tasks are running OK - the run.sh script problem has been cured. | |

| ID: 60074 | Rating: 0 | rate:

| |

|

i wouldnt say "cured". but newer tasks seem to be fine. I'm still getting a good number of resends with the same problem. i guess they'll make their way through the meat grinder before defaulting out. | |

| ID: 60075 | Rating: 0 | rate:

| |

|

My point was: if you get one of these, let it run - it may be going to produce useful science. If it's one of the faulty ones, you waste about 20 seconds, and move on. | |

| ID: 60076 | Rating: 0 | rate:

| |

|

Quico/GDF, GPU utilization is low so I'd like to test running 3 and 4 ATM WUs simultaneously. | |

| ID: 60084 | Rating: 0 | rate:

| |

|

Sorry about the run.sh missing issue of the past few days. It slipped through me. Also they were a few re-send tests that also crashed, but it should be fixed now. | |

| ID: 60085 | Rating: 0 | rate:

| |

Quico/GDF, GPU utilization is low so I'd like to test running 3 and 4 ATM WUs simultaneously. How low is it? It really shouldn't be the case at least taking into account the tests we performed internally. | |

| ID: 60086 | Rating: 0 | rate:

| |

|

My host 508381 (GTX 1660 Ti) has finished a couple overnight, in about 9 hours. The last one finished just as I was reading your message, and I saw the upload size - 114 MB. Another failed with 'Energy is NaN', but that's another question. | |

| ID: 60087 | Rating: 0 | rate:

| |

|

My observations show the GPU switching from periods of high utilization (~96-98%) to periods of idle (0%). About every minute or two. | |

| ID: 60091 | Rating: 0 | rate:

| |

Quico/GDF, GPU utilization is low so I'd like to test running 3 and 4 ATM WUs simultaneously.How low is it? It really shouldn't be the case at least taking into account the tests we performed internally. GPUgrid is set to only DL 2 WUs per computer. It used to be higher but since ACEMD WUs take around 12ish hours and have approxiamtely 50% GPU utilization a normal BOINC client couldn't really make efficient use of more than 2. The history of setting the limit may have had something to do with DDOS attacks and throttling server access as a defense. But Python WUs with a very low GPU utilization and ATM with about 25% utilization could run more. I believe it's possible for the work server to designate how many WUs of a given kind based on the client's hardware. Some use a custom BOINC client that tricks the server into thinking their computer is more than one computer. I suspect 1080s & 2080s could run 3 and 3080s could run 4 ATM WUs. Be nice to give it a try. Checkpointing should be high on your To-Do List followed closely by progress reporting. File size is not an issue on the client side since you DL files over a GB. But increasing the limit on your server side would make that problem vanish. Run times have shortened and run fine, maybe a little shorter would be nice but not a priority. | |

| ID: 60093 | Rating: 0 | rate:

| |

|

I noticed Free energy calculations of protein ligand binding in WUProp. For example, today's time is 0.03 hours. I checked, and i've 68 of these with a total of minimal time. So i checked, and they all get "Error while computing". I looked at a recent work unit, 27429650 T_CDK2_new_2_edit_1oiu_26_T2_2A_1-QUICO_TEST_ATM-0-1-RND4575_0 | |

| ID: 60094 | Rating: 0 | rate:

| |

GPUgrid is set to only DL 2 WUs per computer. it's actually 2 per GPU, for up to 8 GPUs. 16 per computer/host. ACEMD WUs take around 12ish hours and have approxiamtely 50% GPU utilization acemd3 has always used nearly 100% utilization with a single task on every GPU I've ever run. if you're only seeing 50%, sounds like you're hitting some other kind of bottleneck preventing the GPU from working to its full potential. ____________  | |

| ID: 60095 | Rating: 0 | rate:

| |

|

I just started using nvitop for Linux and it gives a very different image of GPU utilization while running ATM: https://github.com/XuehaiPan/nvitop | |

| ID: 60096 | Rating: 0 | rate:

| |

|

i would probably give more trust to nvidia's own tools. watch -n 1 nvidia-smi or watch -n 1 nvidia-smi --query-gpu=temperature.gpu,name,pci.bus_id,utilization.gpu,utilization.memory,clocks.current.sm,clocks.current.memory,power.draw,memory.used,pcie.link.gen.current,pcie.link.width.current --format=csv but you said "acemd3" uses 50%. not ATM. overall I'd agree that ATM is closer to 50% effective or a little higher. it cycles between like 90 seconds @95+% and 30 seconds @0% and back and forth for the majority of the run. ____________  | |

| ID: 60097 | Rating: 0 | rate:

| |

I'm running Linux Mint 19 (a bit out of date)I just retired my last Linux Mint 19 computer yesterday and it had been running ATM, ACEMD & Python WUs on a 2080 Ti (12/7.5) fine. BTW, I tried the LM 21.1 upgrade from LM 20.3 and can't do things like open BOINC folder as admin. I can't see any advantage to 21.1 so I'm going to do a fresh install and revert back to 20.3. My machine has a gtx-950, so cuda tasks are OK.Is there a minimum requirement for CUDA and Compute Capability for ATM WUs? https://www.techpowerup.com/gpu-specs/geforce-gtx-950.c2747 says CUDA 5.2 and https://developer.nvidia.com/cuda-gpus says 5.2. | |

| ID: 60098 | Rating: 0 | rate:

| |

Is there a minimum requirement for CUDA and Compute Capability for ATM WUs? very likely the min CC is 5.0 (Maxwell) since Kepler cards seem to be erroring with the message that the card is too old. all cuda 11.x apps are supported by CUDA 11.1+ drivers. with CUDA 11.1, Nvidia introduced forward compatibility of minor versions. so as long as you have 450+ drivers you should be able to run any CUDA app up to 11.8. CUDA 12+ will require moving to CUDA 12+ compatible drivers. ____________  | |

| ID: 60099 | Rating: 0 | rate:

| |

I'm sure you're right, it's been years since I put more than on GPU on a computer.GPUgrid is set to only DL 2 WUs per computer. ACEMD WUs take around 12ish hours and have approxiamtely 50% GPU utilizationacemd3 has always used nearly 100% utilization with a single task on every GPU I've ever run. if you're only seeing 50%, sounds like you're hitting some other kind of bottleneck preventing the GPU from working to its full potential.[/quote]Let me rephrase that since it's been a long time since there was a steady flow of ACEMD. I always run 2 ACEMD WUs per GPU with no other GPU projects running. I can't remember what ACEMD utilization was but I don't recall that they slowed down much by running 2 WUs together. | |

| ID: 60100 | Rating: 0 | rate:

| |

|

maybe not much slower, but also not faster. | |

| ID: 60101 | Rating: 0 | rate:

| |

i would probably give more trust to nvidia's own tools. nvitop does that but graphs it. | |

| ID: 60102 | Rating: 0 | rate:

| |

maybe not much slower, but also not faster. But it has the advantage that compared to running a single ACEMD WU and letting the second GG sit idle waiting until it finishes and not getting the quick turnaround bonus feels like getting robbed :-) But who's counting? | |

| ID: 60103 | Rating: 0 | rate:

| |

|

until your 12h task turns into two 25hr tasks running two and you get robbed anyway. robbed of the bonus for two tasks instead of just one. | |

| ID: 60104 | Rating: 0 | rate:

| |

|

Picked up another ATM task but not holding much hope that it will run correctly based on the previous wingmen output files. Looks like the configuration is not correct again. | |

| ID: 60105 | Rating: 0 | rate:

| |

|

Does the ATM app work with RTX 4000 series? | |

| ID: 60106 | Rating: 0 | rate:

| |

Does the ATM app work with RTX 4000 series? Maybe. The Python app does, and the ATM is a similar kind of setup. You’ll have to try it and see. Not sure how much progress the project has made for Windows though. ____________  | |

| ID: 60107 | Rating: 0 | rate:

| |

I'm running Linux Mint 19 (a bit out of date)I just retired my last Linux Mint 19 computer yesterday and it had been running ATM, ACEMD & Python WUs on a 2080 Ti (12/7.5) fine. BTW, I tried the LM 21.1 upgrade from LM 20.3 and can't do things like open BOINC folder as admin. I can't see any advantage to 21.1 so I'm going to do a fresh install and revert back to 20.3. Glad to know someone else also has the same problem with Mint 21.1. I will shift to some other flavour. | |

| ID: 60108 | Rating: 0 | rate:

| |

|

Got my first ATM Beta. Completed and validated. | |

| ID: 60111 | Rating: 0 | rate:

| |

My observations show the GPU switching from periods of high utilization (~96-98%) to periods of idle (0%). About every minute or two. That sounds how ATM is intended to work for now. The idle GPU periods correspond to writing coordinates. Happy to know that size of the jobs are good! Picked up another ATM task but not holding much hope that it will run correctly based on the previous wingmen output files. Looks like the configuration is not correct again. I have seen your errors but I'm not sure why it's happening since I got several jobs running smoothly right now. I'll ask around. The new tag is a legacy part on my end about receptor naming. | |

| ID: 60120 | Rating: 0 | rate:

| |

|

Another heads-up, it seems that the Windows app will available soon! That way we'll be able to look into the progress reporting issue. | |

| ID: 60121 | Rating: 0 | rate:

| |

...it seems that the Windows app will available soon! that's good news - I'm looking foward to receiving ATM tasks :-) | |

| ID: 60123 | Rating: 0 | rate:

| |

|

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? | |

| ID: 60126 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? As far as I know, we are doing the final tests. I'll let you know once it's fully ready and I have the green light to send jobs through there. | |

| ID: 60128 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? do you have allow beta/test applications checked? ____________  | |

| ID: 60129 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? Yep. Are you saying that you have received windows tasks for ATM? ____________ Reno, NV Team: SETI.USA | |

| ID: 60130 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? no I don't run windows. i was just asking if you had the beta box selected because that's necessary. but looking at the server, some people did get them. someone else earlier in this thread reported that they got and processed one also. very few went out, so unless your system asked when they were available, it would be easy to miss. you can setup a script to ask for them regularly, BOINC will stop asking after so many requests with no tasks sent. ____________  | |

| ID: 60132 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? I've yet to get a Windoze ATMbeta. They've been available for a while this morning and still nothing. That GPU just sits with bated breath. What's the trick? | |

| ID: 60134 | Rating: 0 | rate:

| |

I see that there is a windows app for ATM. But I have never received an app on any of my win machines, even with an updater. And yes, I have all the right project preferences set (everything checked). So, has anyone received an ATM task on a windows machine? Yep. As I said, I have an updater script running as well. ____________ Reno, NV Team: SETI.USA | |

| ID: 60135 | Rating: 0 | rate:

| |

|

KAMasud got one on his Windows system. maybe he can share his settings. | |

| ID: 60136 | Rating: 0 | rate:

| |

|

Quico, Do you have some cryptic requirements specified for your Win ATMbeta WUs? | |

| ID: 60137 | Rating: 0 | rate:

| |

KAMasud got one on his Windows system. maybe he can share his settings. ____________________ Yes, I did get an ATM task. Completed and validated with success. No, I do not have any special settings. The only thing I do is not run any other project with GPU Grid. I have a feeling that they interfere with each other. How? GPU Grid is all over my cores and threads. Lacks discipline. My take on the subject. Admin, sorry. Even though resources are wasted, I am not after the credits. | |

| ID: 60138 | Rating: 0 | rate:

| |

|

I think it's just a matter of very few tests being submitted right now. Once I have the green light from Raimondas I'll start sending jobs through the windows app as well. | |

| ID: 60139 | Rating: 0 | rate:

| |

|

Still no checkpoints. Hopefully this is top of your priority list. | |

| ID: 60140 | Rating: 0 | rate:

| |

|

Done! Thanks for it. | |

| ID: 60141 | Rating: 0 | rate:

| |

|

There ate two different ATM apps on the server stats page, and also on the apps.php page. But in project preferences, there is only one ATM app listed. We need a way to select both/either in our project preferences. | |

| ID: 60142 | Rating: 0 | rate:

| |

|

Let it be. It is more fun this way. Never know what you will get next and adjust. | |

| ID: 60143 | Rating: 0 | rate:

| |

|

My new WU behaves differently but I don't think checkpointing is working. It reported the first checkpoint after a minute and after an hour has yet to report a second one. Progress is stuck at 0.2 but time remaining has decreased from 1222 days to 22 days. | |

| ID: 60144 | Rating: 0 | rate:

| |

|

I have started to get these ATM tasks on my windoze hosts. (unknown error) - exit code 195 (0xc3)</message> A script error? | |

| ID: 60145 | Rating: 0 | rate:

| |

I have started to get these ATM tasks on my windoze hosts. Hmmm I did send those this morning. Probably they entered the queue once my windows app was live and was looking for the run.bat. If that's the case expect many crashes incoming :_( The tests I'm monitoring seem to be still running so there's still hope | |

| ID: 60146 | Rating: 0 | rate:

| |

|

FWIW, this morning my windows machines started getting ATM tasks. Most of these tasks are erroring out. For these tasks, they have been issued many times over too many and failed every time. Looks like a problem with the tasks and not the clients running them. They will eventually work their way out of the system. But a few of the windows tasks I received today are actually working. Here is a successful example: | |

| ID: 60147 | Rating: 0 | rate:

| |

FWIW, this morning my windows machines started getting ATM tasks. Most of these tasks are erroring out. For these tasks, they have been issued many times over too many and failed every time. Looks like a problem with the tasks and not the clients running them. They will eventually work their way out of the system. But a few of the windows tasks I received today are actually working. Here is a successful example: -------------- Welcome Zombie67. If you are looking for more excitement, Climate has implemented OpenIFS. | |

| ID: 60148 | Rating: 0 | rate:

| |

|

All openifs tasks are already sent. | |

| ID: 60149 | Rating: 0 | rate:

| |

...But a few of the windows tasks I received today are actually working. I have one that is working, but I had to add ATMs to my appconfig file to get them to more accurately show the time remaining, due to what Ian pointed out way upthread. https://www.gpugrid.net/forum_thread.php?id=5379&nowrap=true#60041 I now see realistic time remaining. My current appconfig.xml script app_config> This task ran alongside a F@H task (project 18717) on a RTX3060 12GB card without any problem, in case anybody is interested. | |

| ID: 60150 | Rating: 0 | rate:

| |

|

Why not | |

| ID: 60151 | Rating: 0 | rate:

| |

|

So far, 2 WUs successfully completed, another one running. | |

| ID: 60152 | Rating: 0 | rate:

| |

|

it still can't run run.bat | |

| ID: 60153 | Rating: 0 | rate:

| |

|

progress reporting is still not working. | |

| ID: 60154 | Rating: 0 | rate:

| |

progress reporting is still not working. T_p38 were sent before the update so I guess it makes sense that they don't show reporting yet. Is the progress report for the BACE runs good? Is it staying stuck? | |

| ID: 60155 | Rating: 0 | rate:

| |

|

Yes, BACE looks good. | |

| ID: 60156 | Rating: 0 | rate:

| |

|

Hello Quico and everyone. Thank you for trying AToM-OpenMM on GPUGRID. | |

| ID: 60157 | Rating: 0 | rate:

| |

|

The python task must tell the boinc client how many ticks are to calculate (MAX_SAMPLES = 341 from *_asyncre.cntl times 22 replica) and the end of each tick. | |

| ID: 60158 | Rating: 0 | rate:

| |

|

The ATM tasks also record that a task has checkpointed in the job.log file in the slot directory (or did so, a few debug iterations ago - see message 60046). | |

| ID: 60159 | Rating: 0 | rate:

| |

|

The GPUGRID version of AToM: # Report progress on GPUGRID progress = float(isample)/float(num_samples - last_sample) open("progress", "w").write(str(progress)) which checks out as far as I can tell. last_sample is retrieved from checkpoints upon restart, so the progress % should be tracked correctly across restarts. | |

| ID: 60160 | Rating: 0 | rate:

| |

|

OK, the BACE task is running, and after 7 minutes or so, I see: 2023-03-24 15:40:33 - INFO - sync_re - Started: checkpointing 2023-03-24 15:40:49 - INFO - sync_re - Finished: checkpointing (duration: 15.699278543004766 s) 2023-03-24 15:40:49 - INFO - sync_re - Finished: sample 1 (duration: 303.5407383099664 s) in the run.log file. So checkpointing is happening, but just not being reported through to BOINC. Progress is 3.582% after eleven minutes. | |

| ID: 60161 | Rating: 0 | rate:

| |

|

Actually, it is unclear if AToM's GPUGRID version checkpoints after catching termination signals. I'll ask Raimondas. Termination without checkpointing is usually okay, but progress since the checkpoint would be lost, and the number of samples recorded in the checkpoint file would not reflect the actual number of samples recorded. | |

| ID: 60162 | Rating: 0 | rate:

| |

|

The app seems to be both checkpointing, and updating progress, at the end of each sample. That will make re-alignment after a pause easier, but there's always some over-run, and data lost on restart. It's up to the application itself to record the data point reached, and to be used for the restart, as an integral part of the checkpointing process. | |

| ID: 60163 | Rating: 0 | rate:

| |

|

Seriously? Only 14 tasks a day? GPUGRID 3/24/2023 9:17:44 AM This computer has finished a daily quota of 14 tasks | |

| ID: 60164 | Rating: 0 | rate:

| |

Seriously? Only 14 tasks a day? The quota adjusts dynamically - it goes up if you report successful tasks, and goes down if you report errors. | |

| ID: 60165 | Rating: 0 | rate:

| |

|

The T_PTP1B_new task, on the other hand, is not reporting progress, even though it's logging checkpoints in the run.log <active_task> <project_master_url>https://www.gpugrid.net/</project_master_url> <result_name>T_PTP1B_new_23484_23482_T3_2A_1-QUICO_TEST_ATM-0-1-RND3714_3</result_name> <checkpoint_cpu_time>10.942300</checkpoint_cpu_time> <checkpoint_elapsed_time>30.176729</checkpoint_elapsed_time> <fraction_done>0.001996</fraction_done> <peak_working_set_size>8318976</peak_working_set_size> <peak_swap_size>16592896</peak_swap_size> <peak_disk_usage>1318196036</peak_disk_usage> </active_task> The <fraction done> is reported as the 'progress%' figure - this one is reported as 0.199% by BOINC Manager (which truncates) and 0.200% by other tools (which round). This task has been running for 43 minutes, and boinc_task_state.xml hasn't been re-written since the first minute. | |

| ID: 60166 | Rating: 0 | rate:

| |

|

| |

| ID: 60167 | Rating: 0 | rate:

| |

|

| |

| ID: 60168 | Rating: 0 | rate:

| |

|

My BACE task 33378091 finished successfully after 5 hours, under Linux Mint 21.1 with a GTX 1660 Super. | |

| ID: 60169 | Rating: 0 | rate:

| |

|

Task 27438853 | |

| ID: 60170 | Rating: 0 | rate:

| |

Right, probably the wrapper should send a termination signal to AToM. We have of course access to AToM's sources https://github.com/Gallicchio-Lab/AToM-OpenMM and we can make sure that it checkpoints appropriately when it receives the signal. However, I do not have access to the wrapper. Quico: please advise. | |

| ID: 60171 | Rating: 0 | rate:

| |

|

Hi, i have some "new_2" ATMs that run for 14h+ yet. Should i abort them? | |

| ID: 60172 | Rating: 0 | rate:

| |

|

The wrapper you're using at the moment is called "wrapper_26198_x86_64-pc-linux-gnu" (I haven't tried ATM under Windows yet, but can and will do so when I get a moment). 20:37:54 (115491): wrapper (7.7.26016): starting That would put the date back to around November 2015, but I guess someone has made some local modifications. | |

| ID: 60173 | Rating: 0 | rate:

| |

Hi, i have some "new_2" ATMs that run for 14h+ yet. Should i abort them? I have one at the moment which has been running for 17.5 hours. The same machine completed one yesterday (task 33374928) which ran for 19 hours. I wouldn't abort it just yet. | |

| ID: 60174 | Rating: 0 | rate:

| |

Hi, i have some "new_2" ATMs that run for 14h+ yet. Should i abort them? thank you. I will let them running =) | |

| ID: 60175 | Rating: 0 | rate:

| |

|

And completed. | |

| ID: 60176 | Rating: 0 | rate:

| |

Seriously? Only 14 tasks a day? Quico, This behavior is intended to block misconfigured computers. In this case it's your Windows version that fails in seconds and being resent until it hits a Linux computer or fails 7 times. My Win computer was locked out of GG early yesterday but all my Linux computers donated until WUs ran out. In this example the first 4 failures all went to Win7 & 11 computers and then Linux completed it successfully: https://www.gpugrid.net/workunit.php?wuid=27438768 And the Win WUs are failing in seconds again with today's tranche. | |

| ID: 60177 | Rating: 0 | rate:

| |

|

WUs failing on Linux computers: + python -m pip install git+https://github.com/raimis/AToM-OpenMM.git@172e6db924567cd0af1312d33f05b156b53e3d1c Running command git clone --filter=blob:none --quiet https://github.com/raimis/AToM-OpenMM.git /var/lib/boinc-client/slots/36/tmp/pip-req-build-jsq34xa4 fatal: unable to access '/home/conda/feedstock_root/build_artifacts/git_1679396317102/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placeho/etc/gitconfig': Permission denied error: subprocess-exited-with-error × git clone --filter=blob:none --quiet https://github.com/raimis/AToM-OpenMM.git /var/lib/boinc-client/slots/36/tmp/pip-req-build-jsq34xa4 did not run successfully. │ exit code: 128 ╰─> See above for output. note: This error originates from a subprocess, and is likely not a problem with pip. error: subprocess-exited-with-error https://www.gpugrid.net/result.php?resultid=33379917 | |

| ID: 60183 | Rating: 0 | rate:

| |

|

Any ideas why WUs are failing on a linux ubuntu machine with gtx1070? <core_client_version>7.20.5</core_client_version> | |

| ID: 60184 | Rating: 0 | rate:

| |

(I haven't tried ATM under Windows yet, but can and will do so when I get a moment). Just downloaded a BACE task for Windows. There may be trouble ahead... The job.xml file reads: <job_desc> <unzip_input> <zipfilename>windows_x86_64__cuda1121.zip</zipfilename> </unzip_input> <task> <application>python.exe</application> <command_line>bin/conda-unpack</command_line> <weight>1</weight> </task> <task> <application>Library/usr/bin/tar.exe</application> <command_line>xjvf input.tar.bz2</command_line> <setenv>PATH=$PWD/Library/usr/bin</setenv> <weight>1</weight> </task> <task> <application>C:/Windows/system32/cmd.exe</application> <command_line>/c call run.bat</command_line> <setenv>CUDA_DEVICE=$GPU_DEVICE_NUM</setenv> <stdout_filename>run.log</stdout_filename> <weight>1000</weight> <fraction_done_filename>progress</fraction_done_filename> </task> </job_desc> 1) We had problems with python.exe triggering a missing DLL error. I'll run Dependency Walker over this one, to see what the problem is. 2) It runs a private version of tar.exe: Microsoft included tar as a system utility from Windows 10 onwards - but I'm running Windows 7. The MS utility wouldn't run for me - I'll try this one. 3) I'm not totally convinced of the cmd.exe syntax either, but we'll cross that bridge when we get to it. | |

| ID: 60185 | Rating: 0 | rate:

| |

|

First reports from Dependency Walker: | |

| ID: 60186 | Rating: 0 | rate:

| |

|

Just a note of warning: one of my machines is running a JNK1 task - been running for 13 hours. MAX_SAMPLES = 341 One reason why this needs fixing: I have my BOINC client set up in such a way that it normally fetches the next task around an hour before the current one is expected to finish. Because this one was (apparently) running so fast, it reached that point over five hours ago - and it's still waiting. Sorry Abouh - your next result will be late! | |

| ID: 60188 | Rating: 0 | rate:

| |

|

I also noticed this latest round of BACE tasks have become much longer to run on my GPUs. Some are hitting > 24 hrs. I am going to stop taking new ones unless the # samples/task is trimmed down. | |

| ID: 60189 | Rating: 0 | rate:

| |

|

I had this one running for about 8 hours, but then i had to shut down my computer. | |

| ID: 60190 | Rating: 0 | rate:

| |

|

Forget about a re-start, these WUs cannot even take a suspension. I suspended my computer and this WU collapsed. | |

| ID: 60191 | Rating: 0 | rate:

| |

|

i'm a bit surprised right now, i looked at the resend, it was successfully completed in just over 2 minutes, how come? the computer has more WUs that were successfully completed in such a short time. Am I doing something wrong? | |

| ID: 60192 | Rating: 0 | rate:

| |

I also noticed this latest round of BACE tasks have become much longer to run on my GPUs. Some are hitting > 24 hrs. I am going to stop taking new ones unless the # samples/task is trimmed down. I agree, the 4-6hr runs are much better. ____________  | |

| ID: 60193 | Rating: 0 | rate:

| |

|

I have task that reached 100% an hour ago, which means it is suppose to be finished, but it's still running............. | |

| ID: 60194 | Rating: 0 | rate:

| |

|

My last ATM tasks spent at least a couple of hours at the 100% completion point. | |

| ID: 60195 | Rating: 0 | rate:

| |

|

That's a mute point now. It errored out. | |

| ID: 60196 | Rating: 0 | rate:

| |

|

It looks like you got bit by a permission error. | |

| ID: 60197 | Rating: 0 | rate:

| |

It looks like you got bit by a permission error. The Boinc version is 7.20.7. https://www.gpugrid.net/hosts_user.php?userid=19626 | |

| ID: 60198 | Rating: 0 | rate:

| |

|

Another task failed. | |

| ID: 60199 | Rating: 0 | rate:

| |

|

The output file will always be absent if the task fails - it doesn't get as far as writing it. The actual error is in the online report: ValueError: Energy is NaN. ('Not a Number') That's a science problem - not your fault. | |

| ID: 60200 | Rating: 0 | rate:

| |

|

I've seen that you are unhappy with the last batch of runs. Seeing that they take too much time. I've been playing to divide the runs in different steps to get a sweet spot that you're happy with it and it's not madness for me to organize all this runs and re-runs. I'll backtrack to the previous setting we had before. Apologies for that.

I'll ask Raimondas about this and the other things that have been mentioned since he's the one taking care of this issue. | |

| ID: 60201 | Rating: 0 | rate:

| |

|

I've read some people mentioning that the reporter doesn't work or that it goes over 100%. Does it work correctly for someone? | |

| ID: 60202 | Rating: 0 | rate:

| |

I've read some people mentioning that the reporter doesn't work or that it goes over 100%. Does it work correctly for someone? It varies from task to task - or, I suspect, from batch to batch. I mentioned a specific problem with a JNK1 task - task 33380692 - but it's not a general problem. I suspect that it may have been a specific problem with setting the data that drives the progress %age calculation - the wrong expected 'total number of samples' may have been used. | |

| ID: 60203 | Rating: 0 | rate:

| |

I've read some people mentioning that the reporter doesn't work or that it goes over 100%. Does it work correctly for someone? This one is a rerun, meaning that 2/3 of the run were previously simulated. Maybe it was expecting to start from 0 samples and once it saw that we're at 228 from the beginning, it got confused. I'll comment that. PS: But others runs have been reporting correctly? | |

| ID: 60204 | Rating: 0 | rate:

| |

|

https://www.gpugrid.net/result.php?resultid=33382097 | |

| ID: 60205 | Rating: 0 | rate:

| |

|

see post https://www.gpugrid.net/forum_thread.php?id=5379&nowrap=true#60160 | |

| ID: 60206 | Rating: 0 | rate:

| |

|

Or possibly progress = float(isample - last_sample)/float(num_samples - last_sample) if you want a truncated resend to start from 0% - but might that affect paused/resumed tasks as well? | |

| ID: 60207 | Rating: 0 | rate:

| |

|

None of my WUs from yesterday completed. Please issue a server abort and eliminate all these defective WUs before releasing a new set. Otherwise defects will keep wasting 8 computers time for days to come. | |

| ID: 60208 | Rating: 0 | rate:

| |

The problem is not the time they take to run. I agree with this. I had one error out on a restart two days ago after reaching nearly 100% due to no checkpoints. Not only that, but it then only showed 37 seconds of CPU time, so it doesn’t show what really happened. My latest one did complete but showed no check points. Therefore the long run time of is more of a high risk for a potential interruption. | |

| ID: 60209 | Rating: 0 | rate:

| |

None of my WUs from yesterday completed. Please issue a server abort and eliminate all these defective WUs before releasing a new set. Otherwise defects will keep wasting 8 computers time for days to come. ______________ My problem with re-start and suspending is, these WUs are GPU intensive. As soon as one of these WUs pops up, my GPU fans let me know to do maintenance of the cooling system. I have laptops. I cannot take a blower on a running system. Now this WU for example has run for 21 hours and is at 34.5%. task 27440346 Edit. It is still running fine. | |

| ID: 60210 | Rating: 0 | rate:

| |

It looks like you got bit by a permission error. Not your fault, I got a couple errored tasks that duplicated yours. Just a bad batch of tasks went out. | |

| ID: 60211 | Rating: 0 | rate:

| |

|

I have problem with cmd. It exits with code 1 in 0 seconds. | |

| ID: 60212 | Rating: 0 | rate:

| |

|

I've got another very curious one. | |

| ID: 60213 | Rating: 0 | rate:

| |

None of my WUs from yesterday completed. Please issue a server abort and eliminate all these defective WUs before releasing a new set. Otherwise defects will keep wasting 8 computers time for days to come. _____________________________ The above-mentioned WU is at 71.8% and has been running now for 1 Day and 20 hours. It is still running fine and as I cannot read log files, you can go over what it has been doing once finished. I have marked no further WUs from GPUgrid. I will re-open after updates, etc which I have forced-paused. | |

| ID: 60214 | Rating: 0 | rate:

| |

|

Looked at the errored tasks list on my account this morning and see another slew of badly misconfigured tasks went out. | |

| ID: 60215 | Rating: 0 | rate:

| |

None of my WUs from yesterday completed. Please issue a server abort and eliminate all these defective WUs before releasing a new set. Otherwise defects will keep wasting 8 computers time for days to come. ________________ Completed after two days, four hours and forty minutes. Now there is another problem. One task is showing 100% completed for the last four hours but it is still using the CPU for something. Not the GPU. The elapsed clock is still ticking but the remaining is zero. | |

| ID: 60216 | Rating: 0 | rate:

| |

|

This task PTP1B_23471_23468_2_2A-QUICO_TEST_ATM-0-1-RND8957_1 is currently doing the same on this host. | |

| ID: 60218 | Rating: 0 | rate:

| |

|

This task reached "100% complete" in about 7 hours, and then ran for an additional 7 hours +, before actually finishing. | |

| ID: 60219 | Rating: 0 | rate:

| |

Anybody got that beat?????? The task I reported in Message 60213 (14:55 yesterday) is still running. It was approaching 100% when I went to bed last night, and it's still there this morning. I'll go and check it out after coffee (I can't see the sample numbers remotely). As soon as I wrote that, it uploaded and reported! Ah well, my other Linux machine has got one in the same state. | |

| ID: 60220 | Rating: 0 | rate:

| |

None of my WUs from yesterday completed. Please issue a server abort and eliminate all these defective WUs before releasing a new set. Otherwise defects will keep wasting 8 computers time for days to come. _________________ Just woke up. The task was finished. Sent it home. task 27441741 | |

| ID: 60223 | Rating: 0 | rate:

| |

|

OK, it's the same story as yesterday. This task: | |

| ID: 60224 | Rating: 0 | rate:

| |

OK, it's the same story as yesterday. This task: I believe it's what I imagined. From the manual division I was doing before I was splitting some runs in 2/3 steps: 114 - 228 - 341 samples. If the job ID has a 2A/3A it's most probably that it's starting from a previous checkpoint and the progress report is going crazy with it. I'll pass this on to Raimondas to see if he can get a look at it. Our priority first is to be able to that these job divisions are done automatically like ACEMD does, that way we can avoid these really long jobs for everyone. Doing this manually makes it really hard to track all the jobs and the resends. So I hope that in the next days everything goes smoother. | |

| ID: 60225 | Rating: 0 | rate:

| |

|

Thanks. Now I know what I'm looking for (and when), I was able to watch the next transition. | |

| ID: 60226 | Rating: 0 | rate:

| |

The %age intervals match the formula in Emilio Gallicchio's post 60160 (115/(341-114)), but I can't see where the initial big value of 50.983 comes from. 115/(341-114) = 0.5066 = 50.66% strikingly close. maybe "BOINC logic" in some form of rounding. but it's pretty clear that the 50% value is coming from this calculation. ____________  | |

| ID: 60227 | Rating: 0 | rate:

| |

|

I thought I'd checked that, and got a different answer, but my mouse must have slipped on the calculator buttons. | |

| ID: 60228 | Rating: 0 | rate:

| |

|

After that, it failed after 3 hours 20 minutes with a 'ValueError: Energy is NaN' error. Never mind - I tried. | |

| ID: 60229 | Rating: 0 | rate:

| |

|

C:/Windows/system32/cmd.exe command creates c:\users\frolo\.exe\ folder. | |

| ID: 60230 | Rating: 0 | rate:

| |

Thanks. Now I know what I'm looking for (and when), I was able to watch the next transition. The first 114 samples should be calculated by: T_PTP1B_new_20669_2qbr_23472_1A_3-QUICO_TEST_ATM-0-1-RND2542_0.tar.bz2 I've been doing all the division and resends manually and we've been simplifying the naming convention for my sake. Now we are testing a multiple_steps protocol just like in AceMD which should help ease things and I hope mess less with the progress reporter. | |

| ID: 60233 | Rating: 0 | rate:

| |

|

Thanks. Be aware that out here in client-land we can only locate jobs by WU or task ID numbers - it's extremely difficult to find a task by name unless we can follow an ID chain. | |

| ID: 60234 | Rating: 0 | rate:

| |

|

Yeah I'm sorry about that. I'm trying to learn as I go. | |

| ID: 60237 | Rating: 0 | rate:

| |

|

Two downloaded, the first has reached 6% with no problems. | |

| ID: 60238 | Rating: 0 | rate:

| |

Yeah I'm sorry about that. I'm trying to learn as I go. ____________________ It is un-stable tasks, re-start problems, suspend problems. Quite a few of us have done year-plus runs on Climate. 24-hour runs are no problem. | |

| ID: 60239 | Rating: 0 | rate:

| |

|

deleted | |

| ID: 60240 | Rating: 0 | rate:

| |

|

I believe I just finished one of these ATMbeta tasks. | |

| ID: 60241 | Rating: 0 | rate:

| |

I believe I just finished one of these ATMbeta tasks. Same for me with Linux. Since there's no checkpointing I didn't bother to test suspending. I think all windows WUs failed. | |

| ID: 60242 | Rating: 0 | rate:

| |

|

My current two ATM betas both have MAX_SAMPLES: +70 - but one started at 71, and the other at 141. | |

| ID: 60243 | Rating: 0 | rate:

| |

My current two ATM betas both have MAX_SAMPLES: +70 - but one started at 71, and the other at 141. My observations are same. When the units download, the estimated finish time reads 606 days. https://www.gpugrid.net/results.php?hostid=534811&offset=0&show_names=0&state=0&appid=45 So far, in this batch, 3 WUs completed successfully, 1 error and 1 is crunching, on a windows 10 machine. The units all crash on my other computer, which runs windows 7 and is rather old, 13 years. Maybe, it's time to retire it from this project, though it still runs well on other projects, like Einstein and FAH. https://www.gpugrid.net/results.php?hostid=544232&offset=0&show_names=0&state=0&appid=45 | |

| ID: 60244 | Rating: 0 | rate:

| |

|

My first ATM beta on Windows10 failed after some 6 hours :-( | |

| ID: 60245 | Rating: 0 | rate:

| |

anyone an idea what exactly the problem was? It says ValueError: Energy is NaN. A science error (impossible result), rather than a computing error. | |

| ID: 60246 | Rating: 0 | rate:

| |

|

Potentially, it could also be due to instability in overclocks, where applicable. I know the ACEMD3 tasks are susceptible to a “particle coordinate is NaN” type error from too much overclocks. | |

| ID: 60247 | Rating: 0 | rate:

| |

Potentially, it could also be due to instability in overclocks, where applicable. I know the ACEMD3 tasks are susceptible to a “particle coordinate is NaN” type error from too much overclocks. thanks for this thought; it could well be the case. For some time, this old GTX980TI has no longer followed the settings for GPU clock and Power target, in the old NVIDIA Inspector as well as in the newer Afterburner. Hence, particularly with ATM tasks I noticed an overclocking from default 1152MHz up to 1330MHz. Not all the time, but many times. I now experimented and found out that I can control the GPU clock by reducing the fan speed, with setting the GPU temperature at a fixed value and setting a check at "priorize temperature". So the clock now oscillates around 1.100MHz most of the time. I will see whether the ATM tasks now will fail again, or not. | |

| ID: 60248 | Rating: 0 | rate:

| |

|

My atm beta tasks crash. | |

| ID: 60249 | Rating: 0 | rate:

| |

|

me too. | |

| ID: 60250 | Rating: 0 | rate:

| |

|

Something in your Windows configuration has a problem running cmd.exe and calling the run.bat file. Windows barfs on the 0x1 exit error. | |

| ID: 60251 | Rating: 0 | rate:

| |

|

Another thing that can be possible is that your system re-started after an update or you suspended it. | |

| ID: 60254 | Rating: 0 | rate:

| |

|

The ATM tasks are just like the acemd3 tasks in that they can't be interrupted or restarted without erroring out. Unlike the acemd3 tasks which can be restarted on the same device, the ATM tasks can't be restarted or interrupted at all. They exit immediately if restarted. | |

| ID: 60256 | Rating: 0 | rate:

| |

The ATM tasks are just like the acemd3 tasks in that they can't be interrupted or restarted without erroring out. Unlike the acemd3 tasks which can be restarted on the same device, the ATM tasks can't be restarted or interrupted at all. They exit immediately if restarted. I agree, I have lost quite a few hours on WU's that were going to complete because I had perform reboots and lost them. Is anyone addressing this issue yet? | |

| ID: 60257 | Rating: 0 | rate:

| |

|

Haven't heard or seen any comments by any of the devs. The acemd3 app hasn't been fixed in two years. And that is an internal application by Acellera. | |

| ID: 60258 | Rating: 0 | rate:

| |

|

it is just interesting that acemd3 runs through and ATM does not. errors appear after a few minutes | |

| ID: 60259 | Rating: 0 | rate:

| |

it is just interesting that acemd3 runs through and ATM does not. errors appear after a few minutes If you're time-slicing with another GPU project that will cause a fatal "computation error" when BOINC switches between them. | |

| ID: 60260 | Rating: 0 | rate:

| |

|

Task failed. | |

| ID: 60261 | Rating: 0 | rate:

| |

|

Beta or not...how can a Project send out tasks with this length of runtime and not have any checkpointing of some sort. | |

| ID: 60264 | Rating: 0 | rate:

| |

|

I concur with bluestang, some of those likely successful WU's I lost had 20+ hours getting wasted because of a necessary reboot. Project owners should make some sort of a fix a priority. | |

| ID: 60266 | Rating: 0 | rate:

| |

|

Or just acknowledge you aren't willing to accept the project limitations and move onto other gpu projects that fit your usage conditions. | |

| ID: 60267 | Rating: 0 | rate:

| |

Or just acknowledge you aren't willing to accept the project limitations and move onto other gpu projects that fit your usage conditions. ________________ Do you know what the problem is? Quico has not understood what Abou did at the very start. I am pretty sure whatever it is if he brings it to the thread he will find an answer. There are a lot of people on the thread and one of them is you, who are willing to help to the best of their ability. Experts. I have paused all Microsoft Updates for five weeks which seems to trigger the rest of the updates, like Intels. Just for these WUs. | |

| ID: 60268 | Rating: 0 | rate:

| |

|

But abouh's app is different from Quico's. They use different external tools. You can't apply the same fixes that abouh did for Quico's app. | |

| ID: 60269 | Rating: 0 | rate:

| |

But abouh's app is different from Quico's. They use different external tools. You can't apply the same fixes that abouh did for Quico's app. ___________________ I am not saying anything but I agree with the sentiments of some. Maybe, some of us can play with AToM libs. | |

| ID: 60270 | Rating: 0 | rate:

| |

|

Having looked into the internal logging of Quico's tasks in some detail because of the progress %age problem, it's clear that it goes through the motions of writing a checkpoint normally - 70 times per task for the recent short runs, 341 per task for the very long ones. That's about once every five minutes on my machines, which would be perfectly acceptable to me. | |

| ID: 60271 | Rating: 0 | rate:

| |

|

Impressive. | |

| ID: 60273 | Rating: 0 | rate:

| |

|

anyone any idea why this task: | |

| ID: 60274 | Rating: 0 | rate:

| |

|

ValueError: Energy is NaN. IOW Not a number. | |

| ID: 60275 | Rating: 0 | rate:

| |

|

All WUs seems to be failing the same way with missing files : | |

| ID: 60276 | Rating: 0 | rate:

| |

|

We see this frequently with misconfigured tasks. Researcher does a poor job updating the task generation template when configuring for new tasks. | |

| ID: 60277 | Rating: 0 | rate:

| |

seems to be failing the same way with missing files Same here: https://www.gpugrid.net/result.php?resultid=33406558 But first failed among dozen successful completed. | |

| ID: 60278 | Rating: 0 | rate:

| |

Wastes time and resources for every one. Well, as long as a tasks fails within a few minutes (I had a few such ones yesterday), I think it's not that bad. But I had one, day before yesterday, which failed after some 5-1/2 hours - which is not good :-( | |

| ID: 60279 | Rating: 0 | rate:

| |

|

task 27451592 | |

| ID: 60281 | Rating: 0 | rate:

| |

|

Thought I'd run a quick test to see if there was any progress on the restart front. Waited until a task had just finished, and let a new one start and run to the first checkpoint: then paused it, and waited while another project borrowed the GPU temporarily. Running command git clone --filter=blob:none --quiet https://github.com/raimis/AToM-OpenMM.git /hdd/boinc-client/slots/2/tmp/pip-req-build-368b4spp fatal: unable to access '/home/conda/feedstock_root/build_artifacts/git_1679396317102/_h_env_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placehold_placeho/etc/gitconfig': Permission denied That doesn't sound very hopeful. It's still a problem. | |

| ID: 60282 | Rating: 0 | rate:

| |

task 27451592 _______________________________ task 27451763 task 27451117 task 27452961 Completed and validated. No errors as yet. I dare not even sneeze near them. All updates are off. | |

| ID: 60283 | Rating: 0 | rate:

| |

|

This "OFF" in the WU points towards Python. "AToM" also has something to do with Python. | |

| ID: 60284 | Rating: 0 | rate:

| |

|

I think 'Python' is a programming language, and 'AToM' is a scientific program written in that language. | |

| ID: 60285 | Rating: 0 | rate:

| |

Well, as long as a tasks fails within a few minutes (I had a few such ones yesterday), I think it's not that bad. what I noticed lately on my machines is: when ATM tasks fail, mostly after 60-90 seconds. And stderr always says: FileNotFoundError: [Errno 2] No such file or directory: 'thrombin_noH_2-1a-3b_0.xml' 23:18:10 (18772): C:/Windows/system32/cmd.exe exited; CPU time 18.421875 see here: https://www.gpugrid.net/result.php?resultid=33409106 | |

| ID: 60286 | Rating: 0 | rate:

| |

task 27451592 task 27452387 task 27452312 task 27452961 task 27452969 completed and validated. One task in error, task 33410323 | |

| ID: 60287 | Rating: 0 | rate:

| |

|

Similar story. MCL1_49_35_4-QUICO_ATM_OFF_STEPS-0-5-RND5875_2 failed in 44 seconds with FileNotFoundError: [Errno 2] No such file or directory: 'MCL1_pmx-49-35_0.xml' | |

| ID: 60289 | Rating: 0 | rate:

| |

|

Has anyone noticed the WUs with 'Bace' in their name, they show progress as 100% but the Time Elapsed counter is still ticking. Task Manager shows the task is still busy computing. This goes on for hours on end and one Task went up to 24 Hrs in this state. | |

| ID: 60290 | Rating: 0 | rate:

| |

Similar story. MCL1_49_35_4-QUICO_ATM_OFF_STEPS-0-5-RND5875_2 failed in 44 seconds with same here, about 1 hour ago: FileNotFoundError: [Errno 2] No such file or directory: 'MCL1_pmx-30-40_0.xml' such errors, happening often enough, may show some kind of sloppy tasks configuration ? | |

| ID: 60291 | Rating: 0 | rate:

| |

Similar story. MCL1_49_35_4-QUICO_ATM_OFF_STEPS-0-5-RND5875_2 failed in 44 seconds with __________________ Same here. The Task with 'MCLI' in their name lasted 18 seconds only. task 33411408 | |

| ID: 60292 | Rating: 0 | rate:

| |

|

This WU with 'Jnk1' in it, lasted ten seconds. | |

| ID: 60293 | Rating: 0 | rate:

| |

|

This 'MCL1' has been running steadily for the last hour. But it is showing progress as 100% while the elapsed clock is ticking. Task Manager shows it is busy. | |

| ID: 60294 | Rating: 0 | rate:

| |

But it is showing progress as 100% So with all ATM WUs, this is "normal". Perhaps later the devs will be able to fix it. So there is no need to be surprised by this fact in every post -_- | |

| ID: 60295 | Rating: 0 | rate:

| |

This WU with 'Jnk1' in it, lasted ten seconds. _______________ Completed and validated. No. For some reason, people are aborting, like this WU 'thrombin'. We normally watch the progress report. Instead, check the Task Manager. If there is a heartbeat, let it run. | |

| ID: 60296 | Rating: 0 | rate:

| |

This 'MCL1' has been running steadily for the last hour. But it is showing progress as 100% while the elapsed clock is ticking. Task Manager shows it is busy. _______________ Completed and validated. Auram? | |

| ID: 60297 | Rating: 0 | rate:

| |

|

When tasks are available how much percentage of CPU do they require, does the CPU usage fluctuate like the other Python tasks? | |

| ID: 60301 | Rating: 0 | rate:

| |

When tasks are available how much percentage of CPU do they require, does the CPU usage fluctuate like the other Python tasks? One CPU is plenty for these tasks. It doesn't need a full GPU so I run Einstein, Milkyway or OPNG with it. Problem is if BOINC time slices it the ATM WU will fail when it gets restarted. Unless it time-sliced due to the final step (zipping up maybe?) after several hours. Then when it UL and Report as Valid. The best way to assure these ATM WUs succeed is to not run a different project to avoid having BOINC switch the GPU and crash it when it restarts. Running 2 ATM WUs per GPU or an ACEMD+ATM is ok since it doesn't switch away. | |

| ID: 60302 | Rating: 0 | rate:

| |

This 'MCL1' has been running steadily for the last hour. But it is showing progress as 100% while the elapsed clock is ticking. Task Manager shows it is busy._______________ Yes the failed WU is my Rig-11 which is having intermittent failures/reboots due to a MB/GPU issue of unknown origin. I've swapped GPUs several times and the problem stays with the Rig-11 MB so it's not a bad GPU. If I leave the GPU idle the CPU runs WUs fine. Einstein and Milkyway don't seem to cause the problem but Asteroids, GG and maybe OPNG do at random intervals. Also it might be time-slicing that I described in my penultimate reply. It's probably time to scrap the MB. Since most are designed for gamers they stuff too much junk on them and compromise their reliability. | |

| ID: 60303 | Rating: 0 | rate:

| |

|

Looks like all of today's WUs are failing: FileNotFoundError: [Errno 2] No such file or directory: 'CDK2_new_2_edit-1oiy-1h1q_0.xml' It dumbfounds me why they still have it set to fail 7 times. If they fail at the end then that's several days of compute time wasted. Isn't two failures enough? | |

| ID: 60304 | Rating: 0 | rate:

| |

|

I had two fail in this way, but the rest (20+ or so) are running fine. Certainly not "all" of them. | |

| ID: 60305 | Rating: 0 | rate:

| |

|

Strange enough, about 2 hours ago one of my rigs downloaded 2 ATM tasks, while Python tasks were running. | |

| ID: 60306 | Rating: 0 | rate:

| |

|

I think the beta toggle in preferences is 'sticky' in the scheduler. | |

| ID: 60307 | Rating: 0 | rate:

| |

Strange enough, about 2 hours ago one of my rigs downloaded 2 ATM tasks, while Python tasks were running. I think ATMbeta is controlled by Run test applications? | |

| ID: 60308 | Rating: 0 | rate:

| |

I think ATMbeta is controlled by oh, this might explain. While I unchecked "ATM beta" I neglected to uncheck "Run test applications" | |

| ID: 60309 | Rating: 0 | rate:

| |

|

This WU had me error out for NAN at 913 seconds. I never overclock my GPUs and power limited this 2080 Ti to 180 W since GPUs are notorious for wasting energy. This NAN error is due setting the calculation boundaries wrong. | |

| ID: 60310 | Rating: 0 | rate:

| |

|

Hello Quico, | |

| ID: 60312 | Rating: 0 | rate:

| |

|

File "/var/lib/boinc-client/slots/34/lib/python3.9/site-packages/openmm/app/statedatareporter.py", line 365, in _checkForErrors https://www.gpugrid.net/workunit.php?wuid=27469907raise ValueError('Energy is NaN. For more information, see https://github.com/openmm/openmm/wiki/Frequently-Asked-Questions#nan') ValueError: Energy is NaN. Watched a WU finish and it spent 6 minutes out of 313 minutes on 100%. No checkpointing. Has it been confirmed that the calculation boundaries are correct and not the cause of the NaN errors? | |

| ID: 60319 | Rating: 0 | rate:

| |

|

I, too, had such an error after the task had run for 7.885 seconds: | |

| ID: 60320 | Rating: 0 | rate:

| |

Wow! Six minutes is a significant improvement over the hours it was taking before. Just don't give it a kick and abort. | |

| ID: 60321 | Rating: 0 | rate:

| |

|

Too bad, not so simple after all. I wrote the checkpoint tag in the job.xml in the project directory under Windows and after two samples suspend/resume and again the job started with the first task and died with python pip. | |

| ID: 60322 | Rating: 0 | rate:

| |

I, too, had such an error after the task had run for 7.885 seconds: this time, the task errored out after 16.400 seconds :-( https://www.gpugrid.net/result.php?resultid=33442242 | |

| ID: 60333 | Rating: 0 | rate:

| |

|

It feels like there's at least four categories of ATMbeta WUs running simultaneously. | |

| ID: 60335 | Rating: 0 | rate:

| |

|

My Nation like many others has gone into a default situation. The most expensive item is the supply of electricity and they are frequently switching off the grid without informing us. | |

| ID: 60336 | Rating: 0 | rate:

| |

|

Still no checkpointing. | |

| ID: 60352 | Rating: 0 | rate:

| |

|

If there is a storm and electricity go, WU crashes. I know that Boincer's do not do a re-start for months on end but I have to do a re-start. WU crashes. If the GPU updates or the System updates, the WU crashes. If the cat plays with the keyboard, the WU crashes. | |

| ID: 60353 | Rating: 0 | rate:

| |

Who cares. No, it's not about who cares. This is about which of the project employees has the knowledge and resources to implement the necessary functionality, and which of them has the time for this. And as you should understand, they don't make decisions there on their own, it's not a hobby. The necessary specialists can now be involved in other, more priority projects for the institute, and neither we nor the employees themselves can influence this. Deal with it. Nothing will change from the number of tearful posts about the problem, no matter how much someone would like it. Unless, of course, the goal is once again just to let off steam somewhere because of indignation. | |

| ID: 60354 | Rating: 0 | rate:

| |

|

Task TYK2_m44_m55_5_FIX-QUICO_ATM_Sage_xTB-0-5-RND2847_0 (today): FileNotFoundError: [Errno 2] No such file or directory: 'TYK2_m44_m55_0.xml' Later - CDK2_miu_m26_4-QUICO_ATM_Sage_xTB-0-5-RND8419_0 running OK. | |

| ID: 60357 | Rating: 0 | rate:

| |

|

And a similar batch configuration error with today's BACE run, like 08:05:32 (386384): wrapper: running bin/bash (run.sh) (five so far) Edit - now wasted 20 of the things, and switched to Python to avoid quota errors. I should have dropped in to give you a hand when passing through Barcelona at the weekend! | |

| ID: 60358 | Rating: 0 | rate:

| |

|

I cannot resource share ATMBeta with other projects because it is stopped to run other projects. Ends up with an error. | |

| ID: 60359 | Rating: 0 | rate:

| |

And a similar batch configuration error with today's BACE run, like Same for Win apps: https://www.gpugrid.net/result.php?resultid=33475629 https://www.gpugrid.net/results.php?userid=101590 Sad : / | |

| ID: 60360 | Rating: 0 | rate:

| |

I cannot resource share ATMBeta with other projects because it is stopped to run other projects. Ends up with an error. set all other GPU projects to resource share of 0, then they wont run at all when you have ATM work. ____________  | |

| ID: 60361 | Rating: 0 | rate:

| |

|

many of the recent ATMs errored out after not even a minute, stderr says: | |

| ID: 60362 | Rating: 0 | rate:

| |

|

Same equivalent type of error in Linux for a great many tasks. | |

| ID: 60363 | Rating: 0 | rate:

| |

|

Got a collection of twenty-one errored tasks. Suspended work fetch on that computer. The other is busy with Abous WU. | |

| ID: 60364 | Rating: 0 | rate:

| |

|

Now these are doing it as well: MCL1_m28_m47_1_FIX-QUICO_ATM_Sage_xTB-0-5-RND0954_0 18:09:56 (394275): wrapper: running bin/bash (run.sh) The experimenters and/or staff have got to get a grip on this - you are wasting everybody's time and electricity. BOINC is very unforgiving: you have to get it 100% exact, all at the same time, every time. It's worth you taking a pause after each new batch is prepared, and then going back and proof-reading the configuration. Five minutes spent checking would probably have meant getting some real research results over the weekend: now, nothing will probably work until Monday (and I'm not holding my breath then, either). | |

| ID: 60365 | Rating: 0 | rate:

| |

Now these are doing it as well: MCL1_m28_m47_1_FIX-QUICO_ATM_Sage_xTB-0-5-RND0954_0 Exactly! When you have more Tasks that Error (277) than Valid (240) ... that is pretty damn sad! | |

| ID: 60366 | Rating: 0 | rate:

| |

The experimenters and/or staff have got to get a grip on this - you are wasting everybody's time and electricity. + 1 | |

| ID: 60369 | Rating: 0 | rate:

| |

Got a collection of twenty-one errored tasks. Suspended work fetch on that computer. The other is busy with Abous WU. ___________ Abous, WU finished and I got one ATMBeta. It lasted all of one minute and three seconds. Suspended work fetch on this computer also. Validated two ATMBeta, error twenty-two. | |

| ID: 60370 | Rating: 0 | rate:

| |

|

Maybe someone can answer a question I have. After running ATMBeta, Einstein starts but it reports, GPU is missing. How does this happen? | |

| ID: 60371 | Rating: 0 | rate:

| |

|

atmbeta likely has nothing to do with it. | |

| ID: 60372 | Rating: 0 | rate:

| |

atmbeta likely has nothing to do with it. _____________________________ Thank you. I have just finished reinstalling Windows and now the drivers. | |

| ID: 60373 | Rating: 0 | rate:

| |

|

Clean Windows install and drivers install. | |

| ID: 60375 | Rating: 0 | rate:

| |

And a similar batch configuration error with today's BACE run, like Yes, big mess up on my end. More painful since it happened to two of the sets with more runs. I just forgot to run the script that copies the run.sh and run.bat files to the batch folders. It happened to 2/8 batches but yeah, big whoop. Apologies on that. The "fixed" runs should be sent soon. The "missing *0.xml" errors should not happen anymore too. Regarding checkpoint, at least I, cannot do much more than pass the message which I have done several times. Again, sorry for this. I can understand it to be very annoying. | |

| ID: 60376 | Rating: 0 | rate:

| |

|

Thanks for reporting back. | |

| ID: 60378 | Rating: 0 | rate:

| |

|

Extrapolated execution times for several of my currently running "BACE_" and "MCL1_" WUs are pointing to be longer than other previous batches. | |

| ID: 60379 | Rating: 0 | rate:

| |

|

Agreed. My first BASE of the current batch ran for 20 minutes per sample, compared with previous batches which ran at speeds down as low as 5 minutes per sample. It's touch and go whether they will complete within 24 hours (GTX 1660 Ti/super). | |

| ID: 60380 | Rating: 0 | rate:

| |

Extrapolated execution times for several of my currently running "BACE_" and "MCL1_" WUs are pointing to be longer than other previous batches. I am afraid that just now I am confronted with such a case: the file has size of 719 MB, and it does not upload, just backing off all the time :-( WTF is this? Did it run 15 hours on a RTX3070 just for nothing? | |

| ID: 60381 | Rating: 0 | rate:

| |

|

I'm in the same unfortunate situation with too large an upload. | |

| ID: 60382 | Rating: 0 | rate:

| |

|

Now have 15 BACE tasks backed up because server not accepting the file size. | |

| ID: 60383 | Rating: 0 | rate:

| |

|

I'd hang on to them for a day or two - it can be fixed, if the right person pulls their finger out. | |

| ID: 60384 | Rating: 0 | rate:

| |

I'd hang on to them for a day or two - it can be fixed, if the right person pulls their finger out. Although I doubt that this will happen :-( | |

| ID: 60385 | Rating: 0 | rate:

| |

|

previous instances of this problem you could abort the large upload and it will report fine and you still get credit most of the time. | |

| ID: 60386 | Rating: 0 | rate:

| |

|

I'd like to think that all that bandwidth carries something of value to the researchers - that would be the main point of it. | |

| ID: 60387 | Rating: 0 | rate:

| |

|

i thought one of the researchers said they don't need this file. | |

| ID: 60388 | Rating: 0 | rate:

| |

|

I just aborted such a completed task which then showed as ready to report. Reported it, but zero credit :-( | |

| ID: 60389 | Rating: 0 | rate:

| |

i thought one of the researchers said they don't need this file. Perhaps Quico could confirm that, since we seem to have his attention? | |

| ID: 60390 | Rating: 0 | rate:

| |

|

As some compensation for the BACE tasks, I'm seeing the MCL1 sage seasoned tasks reporting | |

| ID: 60391 | Rating: 0 | rate:

| |

|

OK, confirmed - it is still the Apache problem. Tue 09 May 2023 15:06:15 BST | GPUGRID | [http] [ID#15383] Received header from server: HTTP/1.1 413 Request Entity Too Large File (the larger of two) is 754.1 MB (Linux decimal), 719.15 MB (Boinc binary). At this end, we have two choices: 1) Abort the data transfer, as Ian suggests. 2) Wait 90 days for somebody to find the key to the server closet. Quico? | |

| ID: 60392 | Rating: 0 | rate:

| |

|

is this problem only with "BACE..." tasks, or has anyone seen it with other types of task as well? | |

| ID: 60393 | Rating: 0 | rate:

| |

|

Just BACE tasks affected. I've now aborted 14 tasks and half were credited. | |

| ID: 60395 | Rating: 0 | rate:

| |

Just BACE tasks affected. I've now aborted 14 tasks and half were credited. really strange, isn't it? What's the criterion for granting credit or not granting credit ??? I now aborted two such BACE tasks which could not upload. For one I got credit, for the other one it said "upload failure" - real junk :-((( 15 hours on a RTX3070 just for NOTHING :-((( | |

| ID: 60396 | Rating: 0 | rate:

| |

|

I just aborted my too large upload and got some credit for it. Missed the 50% bonus because of holding onto it for too long. | |

| ID: 60397 | Rating: 0 | rate:

| |

|

When I attempt to Abort two of these WUs, nothing seems to happen at all. Both tasks still show "Uploading" and the Transfers page still shows them at 0%. I have tried "Retry Now" on the Transfers page several times (each) to no avail. Should I instead "Abort Transfer"? | |

| ID: 60398 | Rating: 0 | rate:

| |

|

Yes, you want to go to the Transfers page, select the tasks that are in upload backoff and "Abort Transfer" | |

| ID: 60399 | Rating: 0 | rate:

| |

|

Way to go GPUgrid. Sure are on a roll lately with the complete utter mess ups. | |

| ID: 60400 | Rating: 0 | rate:

| |

|

during last night, two of my machines downloaded and startet "Bace" tasks (first letter in upper case, the following ones in lower case). | |

| ID: 60401 | Rating: 0 | rate:

| |

during last night, two of my machines downloaded and startet "Bace" tasks (first letter in upper case, the following ones in lower case). https://www.gpugrid.net/workunit.php?wuid=27494001 Bace Unit was successful. See above link. If you run them, watch the elapsed time and progress rate, and you will know in a few minutes, how they will go. | |

| ID: 60402 | Rating: 0 | rate:

| |