Message boards : Number crunching : Errors piling up, bad batch of NOELIA?

| Author | Message |

|---|---|

|

I have had several, nine and counting, Noelia tasks fail after only running for around 2 seconds. | |

| ID: 37808 | Rating: 0 | rate:

| |

|

Have you just done an update from Windows Update WDDM? | |

| ID: 37809 | Rating: 0 | rate:

| |

|

The same with me. Suddenly more than 30 errors. No changes to my system other than updating the driver to the latest version. But I have the same issue on machines where nothing has changed for weeks.

    <core_client_version>7.2.42</core_client_version>
    <![CDATA[
    <message>
    (unknown error) - exit code -98 (0xffffff9e)
    </message>
    <stderr_txt>
    # GPU [GeForce GTX 750 Ti] Platform [Windows] Rev [3301M] VERSION [60]
    # SWAN Device 0 :
    # Name : GeForce GTX 750 Ti
    # ECC : Disabled
    # Global mem : 2048MB
    # Capability : 5.0
    # PCI ID : 0000:01:00.0
    # Device clock : 1241MHz
    # Memory clock : 2700MHz
    # Memory width : 128bit
    # Driver version : r340_00 : 34052
    ERROR: file mdioload.cpp line 162: No CHARMM parameter file specified
    18:44:55 (2516): called boinc_finish
    </stderr_txt>
    ]]>

____________ Regards, Josef | |

| ID: 37811 | Rating: 0 | rate:

| |
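For anyone triaging a batch of failed tasks, a small script can pull the fatal line out of a task's stderr text. The following is only a minimal Python sketch, assuming the `ERROR: file <name> line <n>: <message>` shape seen in the log above. The function name `find_errors` and the `SAMPLE` text are hypothetical and are not part of BOINC or the GPUGrid application.

```python
import re

# Assumed line shape, taken from the log quoted in the thread:
#   ERROR: file mdioload.cpp line 162: No CHARMM parameter file specified
ERROR_RE = re.compile(r"ERROR: file (?P<file>\S+) line (?P<line>\d+): (?P<msg>.+)")


def find_errors(stderr_text):
    """Return a list of (file, line_number, message) tuples found in stderr_text."""
    found = []
    for raw in stderr_text.splitlines():
        match = ERROR_RE.search(raw)
        if match:
            found.append(
                (match.group("file"), int(match.group("line")), match.group("msg").strip())
            )
    return found


# Hypothetical excerpt built from lines that appear in the post above.
SAMPLE = """\
# Driver version : r340_00 : 34052
ERROR: file mdioload.cpp line 162: No CHARMM parameter file specified
18:44:55 (2516): called boinc_finish
"""

if __name__ == "__main__":
    for source, line_no, message in find_errors(SAMPLE):
        print(f"{source}:{line_no}: {message}")
```

Grouping the returned tuples by source file and line number would show quickly whether every failure in a batch shares one cause.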

> Have you just done an update from Windows Update WDDM?

No updates of any kind on my system. Looking at my GPUGrid account, it shows one task in progress even though I have nothing in my queue for GPUGrid. I have done a project reset and rebooted the system. Still getting the same problem. Instead of wasting time and bandwidth downloading tasks that are going to fail, I have set the project to no new tasks until someone comes up with an answer. | |

| ID: 37813 | Rating: 0 | rate:

| |

|

Current workaround: I switched my preferences to ACEMD short runs (2-3 hours on fastest card), and everything works fine again. It seems the problem is caused by the long runs. | |

| ID: 37815 | Rating: 0 | rate:

| |

|

Same issue here. 16 errors and increasing. No changes to platform; platform is >50% complete processing three NOELIA_tpam2 tasks without issue. NOELIA_TRPs fail within 3 seconds with the following error: | |

| ID: 37817 | Rating: 0 | rate:

| |

|

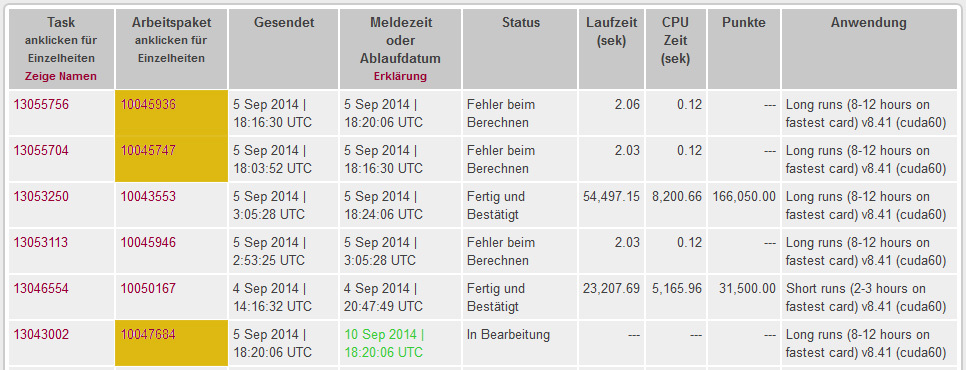

Just had two bad ones in quick succession: | |

| ID: 37819 | Rating: 0 | rate:

| |

|

Me too! | |

| ID: 37820 | Rating: 0 | rate:

| |

|

Indeed, the third type of Noelia's WUs is error-prone. I now have 47 of them, and my wing(wo)men have them too. This seems to be the error: file mdioload.cpp line 162: No CHARMM parameter file specified. | |

| ID: 37825 | Rating: 0 | rate:

| |

|

I'm having the same issue with NOELIA_TRP188 workunits. | |

| ID: 37826 | Rating: 0 | rate:

| |

|

Please cancel these units already, they are all erroring out. | |

| ID: 37828 | Rating: 0 | rate:

| |

|

Have these errors been resolved? | |

| ID: 37830 | Rating: 0 | rate:

| |

|

Unclear if resolved. Within the last 30 minutes, I am no longer receiving NOELIA_TRP long run tasks. All three of my platforms have received NOELIA_tpam tasks, so I have switched back to the long runs for now. | |

| ID: 37831 | Rating: 0 | rate:

| |

> ...I am no longer receiving NOELIA_TRP long run tasks. All three of my platforms have received NOELIA_tpam tasks, so I have switched back to the long runs for now.

Same here. Right now all seems to be fine.

____________ Regards, Josef | |

| ID: 37832 | Rating: 0 | rate:

| |

|

Feel free to send the NOELIA_TRP WUs my way. They run fine on my 780Ti under linux. | |

| ID: 37834 | Rating: 0 | rate:

| |

|

GPUGrid Admins:

> I'm having the same issue with NOELIA_TRP188 workunits.

That is exactly what's happening for me. The NOELIA_TRP188 work units error out just like that, for Windows machines. http://www.gpugrid.net/workunit.php?wuid=10045781 http://www.gpugrid.net/workunit.php?wuid=10045192 http://www.gpugrid.net/workunit.php?wuid=10045206

Then, BOINC immediately reports the error (per your setting), gets a network backoff for GPUGrid, then starts asking my backup projects for work. So, I get to work on backup projects until you can fix this. Can you kindly please determine the problem, and cancel the work units that have problems, and then relay to us what happened?

Thanks, Jacob | |

| ID: 37837 | Rating: 0 | rate:

| |

> ...I am no longer receiving NOELIA_TRP long run tasks. All three of my platforms have received NOELIA_tpam tasks, so I have switched back to the long runs for now.

I am still getting them, just not as frequently.

> Feel free to send the NOELIA_TRP WUs my way. They run fine on my 780Ti under linux.

While you may think this to be true, my Linux computers have had their share of these errors, just not as frequently. FWIW, I am going back to Einstein@home until this is resolved. Enough is enough. | |

| ID: 37838 | Rating: 0 | rate:

| |

> While you may think this to be true, my Linux computers have had their share of these errors, just not as frequently.

Good point. I've only had 2 WUs so that's certainly not enough for me to conclude that these WUs are ok on my machine. | |

| ID: 37839 | Rating: 0 | rate:

| |

> I am still getting them, just not as frequently.

You're right. I just checked one of my PCs. Two attempts failed before the third worked: 18:03 fail, 18:16 fail, 18:20 OK.

____________ Regards, Josef | |

| ID: 37847 | Rating: 0 | rate:

| |

|

Any update? Can I change back to long runs? My computers run unsupervised over the weekend, so I do not want to pile up errors. | |

| ID: 37853 | Rating: 0 | rate:

| |

> Any update? Can I change back to long runs? My computers run unsupervised over the weekend, so I do not want to pile up errors.

While you may still get a bad task here or there, I would venture to say the number of current bad tasks has sharply dwindled. | |

| ID: 37857 | Rating: 0 | rate:

| |

|

I have had 5 NOELIA fails since 3rd of September. The last unit was sent to my workstation 7 Sep 2014 12:59:07 UTC.

| |

| ID: 37862 | Rating: 0 | rate:

| |

|

Those which run for a long time before the error shows (the NOELIA_tpam2 workunits) are failing because they got a lot of "The simulation has become unstable. Terminating to avoid lock-up" messages before they actually fail. This kind of error is usually caused by: | |

| ID: 37863 | Rating: 0 | rate:

| |
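One way to check for this pattern is to count how often the instability message appears in each task's stderr output. The following is only a rough Python sketch: the message text is the one quoted in the post above, while the function names and the `SAMPLE_TASKS` data are hypothetical placeholders. It also assumes you have already saved each task's stderr text yourself.

```python
# Message text as quoted in the post above.
WARNING_TEXT = "The simulation has become unstable. Terminating to avoid lock-up"


def count_instability_warnings(stderr_text):
    """Count how many times the instability message appears in stderr_text."""
    return stderr_text.count(WARNING_TEXT)


def flag_unstable_tasks(tasks):
    """Given {task_name: stderr_text}, return {task_name: count} for tasks
    whose output contains the message at least once."""
    counts = {name: count_instability_warnings(text) for name, text in tasks.items()}
    return {name: n for name, n in counts.items() if n > 0}


# Hypothetical data for illustration only; these are not real task logs.
SAMPLE_TASKS = {
    "task_a": "(other output)\n" + (WARNING_TEXT + "\n") * 3,
    "task_b": "(other output)\n",
}

if __name__ == "__main__":
    for name, n in flag_unstable_tasks(SAMPLE_TASKS).items():
        print(f"{name}: {n} instability warning(s)")
```

A task that finished successfully but still shows a non-zero count would be worth a closer look at clocks and cooling.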

> Those which run for a long time before the error shows (the NOELIA_tpam2 workunits) are failing because they got a lot of "The simulation has become unstable. Terminating to avoid lock-up" messages before they actually fail. This kind of error is usually caused by:

I disagree that any of the 4 points is the issue. My Nvidia Quadro K4000 GPU is completely stock with absolutely no modifications or overclocking applied. So the frequencies are right. Also the case of my host Dell Precision T7610 is completely unmodified and the case fans are regulated automatically as always. The GPU runs at 75 °C, which is well on the safe side. Further, I haven't performed a driver update for months. May I add that I hadn't noticed any failed WUs on my system until now. Within 5 days 5 NOELIA WUs failed. | |

| ID: 37874 | Rating: 0 | rate:

| |

> Those which run for a long time before the error shows (the NOELIA_tpam2 workunits) are failing because they got a lot of "The simulation has become unstable. Terminating to avoid lock-up" messages before they actually fail. This kind of error is usually caused by:

Of course there are more possibilities, but these 4 points are the most frequent ones, and they can be checked easily by tuning the card with software tools (like MSI Afterburner). Furthermore, these errors could be caused by a faulty (or inadequate) power supply, or by the aging of the components (especially the GPU). These are much harder to fix, but you can still have a stable system with these components if you reduce the GPU/GDDR5 frequency. It's better to have a 10% slower system than a system producing (more and more frequent) random errors.

> My Nvidia Quadro K4000 GPU is completely stock with absolutely no modifications or overclocking applied. So the frequencies are right.

The statement in the second sentence does not follow from the first. The frequencies (for the given system) are right when there are no errors. The GPUGrid client pushes the card very hard, like the infamous FurMark GPU test, so we have had a lot of surprises over the years (regarding stock frequencies).

> Also the case of my host Dell Precision T7610 is completely unmodified and the case fans are regulated automatically as always. The GPU runs at 75 °C, which is well on the safe side. Further, I haven't performed a driver update for months.

It is really strange that a card could have errors even below 80°C. I have two GTX 780Ti's in the same system; one of them is an NVidia standard design, the other is an OC model (BTW both of them are Gigabyte). I had errors with the OC model right from the start while its temperature was under 70°C (only with GPUGrid; no other testing tools showed any errors), but reducing its GDDR5 frequency from 3500MHz to 2700MHz (!) solved my problem. After a BIOS update this card is running error free at 2900MHz, but that is still way below the factory setting.

> May I add that I hadn't noticed any failed WUs on my system until now. Within 5 days 5 NOELIA WUs failed.

If you check the logs of your successful tasks, those also have these "The simulation has become unstable. Terminating to avoid lock-up" messages, so you were lucky that those workunits were successful. If you check my (similar NOELIA) workunits, none of them has these messages. So, give it a try and reduce the GPU frequency (it's harder to reduce the GDDR5 frequency, as you have to flash the GPU's BIOS). | |

| ID: 37875 | Rating: 0 | rate:

| |
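The trade-off between a slower card and a failing one can be put into rough numbers. The sketch below is only an illustration in Python. The clock figures come from the post above, but the 20% failure rate is an invented example value, and the model makes the simplifying assumption that a failed task wastes its full run time. Both function names are hypothetical.

```python
def percent_below(stock_mhz, actual_mhz):
    """How far actual_mhz sits below stock_mhz, as a percentage of stock."""
    return 100.0 * (stock_mhz - actual_mhz) / stock_mhz


def useful_throughput(relative_speed, failure_rate):
    """Valid work per unit time, relative to an error-free card at full speed.

    Simplifying assumption: every failed task wastes its whole run time,
    so only the (1 - failure_rate) share of the work counts.
    """
    return relative_speed * (1.0 - failure_rate)


if __name__ == "__main__":
    # GDDR5 clocks mentioned in the post: 3500 MHz factory, 2700 and 2900 MHz stable.
    print(f"2700 MHz is {percent_below(3500, 2700):.1f}% below factory")
    print(f"2900 MHz is {percent_below(3500, 2900):.1f}% below factory")

    # Illustrative comparison; the 20% failure rate is made up for this example.
    slow_but_stable = useful_throughput(0.9, 0.0)
    fast_but_flaky = useful_throughput(1.0, 0.2)
    print(f"10% slower, no errors: {slow_but_stable:.2f}")
    print(f"full speed, 20% failed: {fast_but_flaky:.2f}")
```

Under these assumptions the slower, stable card delivers more valid work once the failure rate at full speed exceeds 10%. Note that `relative_speed` is an input here and is not derived from the clocks, since memory clock and task speed need not scale linearly.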

|

The direct crashes should be fixed now. | |

| ID: 37881 | Rating: 0 | rate:

| |

|

The Noelias are doing okay on my systems after last week's hiccup, but now the latest Santis (final) have this error: ERROR: file mdioload.cpp line 81: Unable to read bincoordfile. | |

| ID: 37888 | Rating: 0 | rate:

| |
