Message boards : Graphics cards (GPUs) : New driver for nvidia

A new driver for NVIDIA GPUs, 334.89, is available as of today.

thx :-)

My first impression is that 334.89 (on Win7) is similar to its Beta predecessor.

Just updated to 334.89 from 332.21. I'm now seeing that ACEMD tasks are no longer using a full core each. Is this normal?

> Just updated to 334.89 from 332.21. I'm now seeing that ACEMD tasks are no longer using a full core each. Is this normal?

Yes, that's as expected with 334. You might see a minimal drop in performance for some WUs, but that is offset by the greatly reduced CPU load.

Matt

Excellent. Thank you for the quick reply.

I like the 334.89 driver for my 680/460 XP machines. GPU utilization is just a couple of percent lower (down from 97%/99% to 95%/98%), but CPU use is a whole lot lower for the 680. The 460 was already low to begin with.

Thanks for this useful information, Jeremy. I had downloaded the latest driver and was planning on installing it, but after reading your post I won't. My 780Ti already has low GPU usage, and I'd like it higher, not lower. I tried all your settings, but the card will not boost, despite running at only 70-72°C.

Good to know about the 780Ti. I'm about to switch my 680s out for two of those.

> Good to know about the 780Ti. I'm about to switch my 680s out for two of those.

My 780Ti is still fast, twice as fast as my 660, but it could be faster, judging by Jeremy's results (same OS as mine) or by results under XP or Linux. I will replace my two 660s with one 790 or a 780Ti.

Greetings from TJ

Matt, if this new driver causes such a performance hit on faster cards, I can think of two things to do:

With the same or similar CPU usage settings (75% in my case) I find no issues with the 334.89 driver. On my W7 system the apps are either the same speed or slightly faster. That's with GTX670 and GTX770s only, though (no GK110 cards). So perhaps it's just an issue on GK110 cards? I would want to see it tested by several others, and hear about their BOINC settings, before announcing it as a bad egg (and then only for Win7 and GK110).

I haven't noticed any difference on my Windows 7 computer with an EVGA 780Ti SC.

> With the same or similar CPU usage settings (75% in my case) I find no issues with the 334.89 driver. On my W7 system the apps are either the same speed or slightly faster. That's with GTX670 and GTX770s only, though (no GK110 cards). So perhaps it's just an issue on GK110 cards? I would want to see it tested by several others, and hear about their BOINC settings, before announcing it as a bad egg (and then only for Win7 and GK110).

I'm also running at 75% CPU usage with my 680s, but will be upgrading to 780Tis either today or tomorrow. I'll report back afterward and let you know what I find. I haven't noticed any difference in speed with the 680s running 334.89.

On a GT640 (DDR3) I'm seeing the short-run (SR) runtime increase from 34,440 s to 36,270 s, i.e. a 5% throughput reduction, after switching from WHQL 327 to 334.89. CPU usage is set to 100%, but in practice only about 7 of 8 cores are loaded because of my app_config files.
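
The runtime increase and the throughput reduction quoted above are two views of the same change, since throughput (WUs per unit of time) is the reciprocal of runtime. A minimal sketch that recomputes both from the two runtimes in the post; `runtime_and_throughput_change` is a hypothetical helper, not part of BOINC or GPUGrid.

```python
from typing import Tuple


def runtime_and_throughput_change(old_runtime_s: float, new_runtime_s: float) -> Tuple[float, float]:
    """Return (relative runtime increase, relative throughput reduction).

    Throughput is taken as work units completed per unit of time, i.e. 1 / runtime,
    so the two figures are related but not identical.
    """
    runtime_increase = new_runtime_s / old_runtime_s - 1.0
    throughput_reduction = 1.0 - old_runtime_s / new_runtime_s
    return runtime_increase, throughput_reduction


# Short-run times on the GT640 (DDR3) quoted above: WHQL 327 vs. 334.89.
increase, reduction = runtime_and_throughput_change(34440, 36270)
print(f"runtime +{increase:.1%}, throughput -{reduction:.1%}")  # runtime +5.3%, throughput -5.0%
```

For small changes the two percentages are close, which is why "5%" fits both; for large slowdowns they diverge noticeably.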

I recently updated my GTX 650Ti drivers to 320.57. I am curious and would like an explanation as to why my tasks are all CUDA 4.2 while everyone else appears to be processing CUDA 5.5 tasks. The CPU utilization and run time for all my tasks are too high. Can anyone help?

CUDA 5.5 work is only sent to hosts with a newer driver version, if I remember correctly. Older drivers officially support it, but it didn't work properly with them. The WHQL 327 or 332 drivers should be fine; for 334, see the discussion here. For optimal runtimes your CPU should not run at 100% load.

> CUDA 5.5 work is only sent to hosts with a newer driver version, if I remember correctly. Older drivers officially support it, but it didn't work properly with them. The WHQL 327 or 332 drivers should be fine; for 334, see the discussion here. For optimal runtimes your CPU should not run at 100% load.

Thanks for the information; I will install new drivers shortly.

The Windows app versions are 8.15 (cuda42) and 8.15 (cuda55).

Just got my 780Tis in and running on 334.89. One is running SANTI_MAR at 71-73% utilization and the other is running NOELIA_FXA averaging around 79% utilization.

I'm using driver 331.82 and get ~76% GPU usage on the 780Ti. Run times are around 24,000 seconds but vary from WU to WU. With XP or Linux, times go under 20,000 seconds, but I have not tried that myself. Still, the card is faster than a GTX680, even on Win7/8/8.1.

Thanks. It looks like we're having similar problems with this card. I've posted a couple of times on the "Poor times with 780 Ti" thread.

Matt,

Jeremy,

Got to it a bit early.

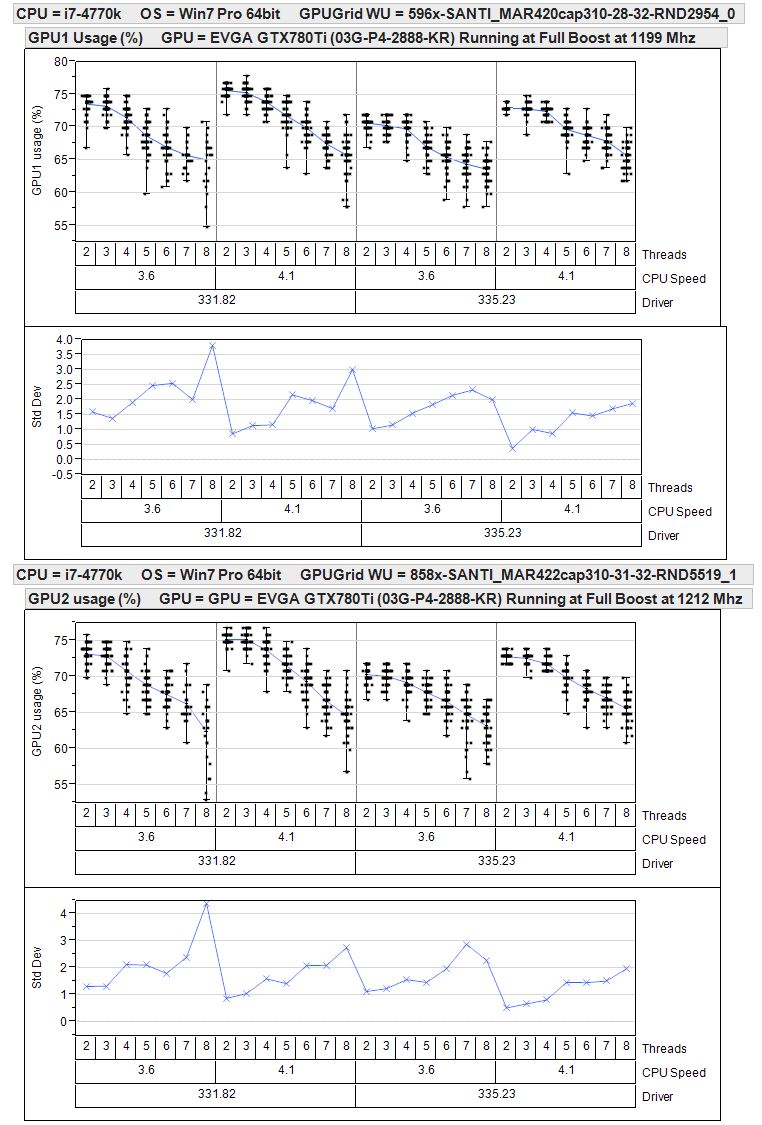

Driver is 331.82 and OS is Win7 64-bit. I have a SANTI task running on each 780Ti card. Both cards are holding full boost (1187 and 1200 MHz). EVGA Precision reports utilization of ~75% with low variation (fluctuating between 72% and 77%).

> Not sure what is happening between reboots.

When you stop crunching to reboot, the GPU cools off. When it's rebooted and crunching again, the temperature rises to the point where the card downclocks to try to keep its temperature down. If you have adequate cooling, the temperature will decrease, and when it drops below the "magic temp" the clocks boost again. If you don't have adequate cooling, the temperature will not drop and the clocks will stay at the low speed until you quit crunching and reboot, at which time the GPU cools off.

> Just got my 780Tis in and running on 334.89. One is running SANTI_MAR at 71-73% utilization and the other is running NOELIA_FXA averaging around 79% utilization.

On my W7 rig, running 4 CPU tasks, I got ~80% utilization for a SANTI_MAR and ~85% for a NOELIA_FXA on high-end GK104 cards. However, I noticed that task utilization drifted a bit during the run. At times it was as high as 87% for the NOELIA_FXA and 82% for the SANTI_MAR, but it was also a bit lower (79% and 83%). By varying CPU usage from 50% to 75% I do see some changes: a 3 to 5% improvement at a 50% CPU setting in BOINC.

With higher-end cards it's likely that the WDDM introduces a larger overhead; it's being asked to do more per unit of time. I see it as a bottleneck somewhat akin to the bus issues on a few cards (the 192-bit memory bus causes some performance loss on the GTX660Ti, albeit along with cache size); applied to larger cards, it's worse. While you can tweak a GTX660Ti to optimize for performance/Watt, there is little that can be done to prevent the WDDM from interfering, AFAIAA.

Jeremy, stop running CPU tasks and see what happens. Either you've found a bug in the driver or it's because the CPU is being used. People should try to remember that CPU usage can hinder GPU tasks, because the GPU tasks still need to use the CPU. The interference varies by CPU application and by GPUGrid task type. Having a web page with video on it constantly refreshing will reduce performance, as will screen-savers...

I have some data on my 780Ti to share.

My GTX780Ti is running at 70°C at the moment, which is cool for this card, but it runs at a steady 875.7 MHz, with GPU usage at 76% on Win7. Five threads run Rosetta; the other 3 are left for the GPU and other programs.

> When you stop crunching to reboot, the GPU cools off. When it's rebooted and crunching again, the temperature rises to the point where the card downclocks to try to keep its temperature down. If you have adequate cooling, the temperature will decrease, and when it drops below the "magic temp" the clocks boost again. If you don't have adequate cooling, the temperature will not drop and the clocks will stay at the low speed until you quit crunching and reboot, at which time the GPU cools off.

Dagorath, all of these utilizations were measured right after a reboot. The temps were ~45°C right at the start, and I only let the system run for a couple of minutes. The WU below finished last night, and you can see all the reboots, driver changes, and the temperature ranges during the testing. The only thing I am trying to understand is why utilization would jump around so much after reboots. It seems "sticky": whatever the reboot sets is where it stays. Of course, I did not let it run for hours and/or finish a WU to see if it stayed at the low utilization. The utilizations I reported did not change or have any slope to them while I let the system run for those few minutes. Once BOINC started processing (after a 30 s delay), utilization simply went from 0 to the reported number and stayed flat, with some variation.

http://www.gpugrid.net/result.php?resultid=7844175

> Jeremy, stop running CPU tasks and see what happens. Either you've found a bug in the driver or it's because the CPU is being used. People should try to remember that CPU usage can hinder GPU tasks, because the GPU tasks still need to use the CPU. The interference varies by CPU application and by GPUGrid task type. Having a web page with video on it constantly refreshing will reduce performance, as will screen-savers...

skgiven, before and after the series of reboots, I tried from 0 to 5 extra CPU tasks (not counting the 2 threads for the GPUGrid tasks), but I could not move the needle noticeably on utilization. I am talking about as much as a 30% drop in GPU utilization after a reboot, not the single-digit differences (if I remember correctly) that you discussed. Also, it changes from reboot to reboot. I will try the reboot series again with 0 extra CPU tasks to rule that out, just in case.

In the past there were issues with the Prefer Maximum Performance setting, and I think they still exist in XP (where you can't set it). It's likely there is an issue again for some GTX780Ti cards. If that's not the case, perhaps the clocks/boost are not behaving normally. Are you using a start-up delay?

Reading these posts, an interesting idea occurred to me regarding the lower GPU utilization when set to "maximum performance".

> In the past there were issues with the Prefer Maximum Performance setting, and I think they still exist in XP (where you can't set it). It's likely there is an issue again for some GTX780Ti cards. If that's not the case, perhaps the clocks/boost are not behaving normally. Are you using a start-up delay?

I don't have a start-up delay, and I recently updated the motherboard BIOS, as USB 3.0 was not working with the Asus factory one. Indeed, Jeremy and I have the same CPU (4770/4771), the same OS and the same settings. Two differences: I also have a GTX770 in the system, and both of my cards are from Asus, while Jeremy's are EVGA.

Greetings from TJ

> Two differences: I also have a GTX770 in the system, and both of my cards are from Asus, while Jeremy's are EVGA.

The card manufacturer normally doesn't matter; it's the same chip and drivers anyway. Your 2nd card will force both of your cards into PCIe 3.0 x8, but this has not mattered much at GPUGrid before, so I doubt it contributes significantly to any runtime differences.

MrS

I noticed my GTX770 was operating at 1045 MHz today (334.89 driver) with 49% GPU power usage. When I opened the NVIDIA Control Panel and went to 3D settings, Power Management Mode was set to Adaptive. I changed this to Prefer Maximum Performance and, in MSI Afterburner, increased the clock by 78 MHz and the power limit by 5%. This made no difference to the frequency until I restarted the system; with previous drivers, the changes were immediate. Prior to installing 334.89 I had Prefer Maximum Performance selected.

> I'm going back to 332.21 and I suggest others do the same if the clocks drop for you too.

I've come to the same conclusion over the last couple of days. I tested 334.89 and 331.82 several times under different conditions (Adaptive vs. Max Performance, reboot vs. no reboot between setting changes, clean install vs. normal upgrade in GeForce Experience). I finally came back to 332.21, and performance has been rock-solid since. Both cards are at full boost and seem to be staying that way from WU to WU, and my temps are even a couple of degrees lower compared to 331.82 at the same GPU load. I really liked being able to run extra CPU tasks with 334.89, but it doesn't seem to give reliable performance with the 780Ti. It worked very well with my 680s, so I may use it with those when I get them back into a crunching box. Of course, now that I've posted this, I'm fully expecting things to start acting funny again...

Hi Matt, I've seen your 780Ti times and am impressed. I also have Win7, but my times are 24,000-27,000 seconds. The card runs at 878.7 MHz, with the memory clock at 1749.6 MHz and a temperature of 69-70°C (GPU-Z). I run only 5 tasks on my CPU, 2 for the GPU and 1 free.

> Hi Matt, I've seen your 780Ti times and am impressed. I also have Win7, but my times are 24,000-27,000 seconds. The card runs at 878.7 MHz, with the memory clock at 1749.6 MHz and a temperature of 69-70°C (GPU-Z). I run only 5 tasks on my CPU, 2 for the GPU and 1 free.

I'm afraid there isn't much I can tell you. The cards' base clock is 980 MHz, and at full boost one runs at 1124 MHz and the other at 1137 MHz. I've never had them downclock below their base speed. The base memory speed on the cards is 7000 MHz, but I run in SLI for gaming, so the reported memory speed per card is 3500 MHz. The cards typically stay in the 67-72°C range, depending on how warm the room is. I'm currently running the CPU at 50%, which translates to 3 CPU tasks alongside GPUGrid on 332.21, but I can run at least one more without affecting GPU performance. The only setting I've changed is the power management mode in the NVIDIA Control Panel: "Prefer Maximum Performance" instead of the default "Adaptive". Jacob Klein started a new thread on maintaining max boost on Kepler cards, which looks promising. I've not implemented the technique yet, but I may soon if my performance doesn't stabilize more to my liking.
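
The memory figures in this thread (1749.6 MHz in GPU-Z, 3500 MHz and 7000 MHz here) may simply be the same clock reported under different conventions. Assuming the usual GDDR5 convention, in which GPU-Z shows the memory clock itself, some overclocking tools show double that, and the "effective" rate on the spec sheet is four times it, the arithmetic lines up as in the sketch below. Which convention EVGA Precision uses is an assumption here, so treat this as one possible reading rather than a definitive explanation.

```python
# Assumed GDDR5 reporting conventions (general background, not from this thread):
# the memory clock itself, a doubled "DDR" figure, and the quadrupled effective data rate.
GDDR5_MULTIPLIERS = {"base": 1, "double": 2, "effective": 4}


def gddr5_views(memory_clock_mhz: float) -> dict:
    """Express one GDDR5 memory clock in each common reporting convention."""
    return {name: memory_clock_mhz * k for name, k in GDDR5_MULTIPLIERS.items()}


# 1749.6 MHz is the GPU-Z memory clock TJ reported for a GTX780Ti.
for name, mhz in gddr5_views(1749.6).items():
    print(f"{name:>9}: {mhz:.1f} MHz")
```

Under these assumptions, ~1750, ~3500 and ~7000 MHz describe one and the same memory clock, which would account for a 3500 MHz per-card reading without involving SLI.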

I have also set the NVIDIA 3D setting to Prefer Maximum Performance, as that is the advice here, but I have tried running on Adaptive for a few days and it made no difference.

I run Precision X, but mostly just for monitoring the cards. I've tried using it in the past to overclock my GTX 680s, but that tends to cause tasks to fail on this project.

> I'm going back to 332.21 and I suggest others do the same if the clocks drop for you too.

I concur with both (especially after having to revert the kernel to get the old driver back, and the first WU failed). Thanks for all the info! On my Linux system (GTX 780) I found that while the new driver (or beta) dramatically dropped CPU usage, there was a significant increase in run times and my credit rate suffered. The difference between the reporting times of 2 adjacent WUs with different drivers was 4 hours! The downside is that the system is louder with the old driver than with the new ones, but I don't have the tools in place to see what is going on.

| Driver | Work unit | Run time (s) | CPU time (s) | Credit |
|---|---|---|---|---|
| Old | 536x-SANTI_MARwtcap310-12-32-RND6877_0 (http://www.gpugrid.net/workunit.php?wuid=5202339) | 22,237.18 | 22,016.17 | 115,650.00 |
| New | 579x-SANTI_MAR422cap310-15-32-RND3745_0 (http://www.gpugrid.net/workunit.php?wuid=5222500) | 35,427.61 | 3,789.13 | 115,650.00 |
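
To express the "4 hours" as a credit rate, the sketch below recomputes credits per hour, the share of run time spent on the CPU, and the run-time difference for the two work units in the post. The figures are copied from the post; the `WorkUnit` class is a hypothetical helper for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkUnit:
    label: str
    run_time_s: float
    cpu_time_s: float
    credit: float

    @property
    def credits_per_hour(self) -> float:
        return self.credit / self.run_time_s * 3600

    @property
    def cpu_share(self) -> float:
        # Fraction of wall-clock time during which the task kept a CPU core busy.
        return self.cpu_time_s / self.run_time_s


old = WorkUnit("old driver", 22_237.18, 22_016.17, 115_650.00)
new = WorkUnit("new driver", 35_427.61, 3_789.13, 115_650.00)

for wu in (old, new):
    print(f"{wu.label}: {wu.credits_per_hour:,.0f} credits/h, CPU share {wu.cpu_share:.0%}")

extra_hours = (new.run_time_s - old.run_time_s) / 3600
rate_drop = 1 - new.credits_per_hour / old.credits_per_hour
print(f"extra run time: {extra_hours:.2f} h, credit-rate drop: {rate_drop:.0%}")
```

With these inputs the run takes about 3.7 hours longer and the credit rate drops by roughly 37% (about 18,700 to 11,750 credits/h), while the CPU share falls from about 99% to about 11%: far less CPU time, but noticeably longer runs.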

I get exactly the same result: run times worsened by about four hours, on both the GTX Titan and the GTX 680. On the GTX Titan I now get run times of about 29,000 seconds, which is far too slow.

335.23 WHQL was released today... I'll post back later on Win7 CPU usage.

> 335.23 WHQL was released today... I'll post back later on Win7 CPU usage.

Thanks for the heads up! I have confirmed that, on Windows 8.1 x64, 335.23 shows the same "low CPU usage" on my Kepler device as the 334.89 and 334.67 drivers. I'm also testing whether it will fall back from Max Boost for reason "Util" when the load doesn't meet a certain threshold. I suspect it will. Therefore, I recommend using the following 2 posts to "Force Max Boost" on any Kepler system:

http://www.gpugrid.net/forum_thread.php?id=3647&nowrap=true#35410

http://www.gpugrid.net/forum_thread.php?id=3647&nowrap=true#35562

Edit: Yup, I confirmed that the behavior where it can downclock from Max Boost and stay downclocked still exists. I completed a task, there was a brief 15-second pause between tasks (so it downclocked to 3D Base MHz), and then a new task started. Even at a solid 82-84% GPU usage, the GPU is not boosting back up at all. It's probably not considered a bug by NVIDIA, since in their eyes there's not enough demand on the GPU to warrant boosting. So... I recommend forcing Max Boost, per the links I posted.

Regards,
Jacob
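
Jacob's check (is the card busy but not boosting?) can be scripted. The sketch below assumes a driver whose `nvidia-smi` supports `--query-gpu` with the `index`, `utilization.gpu`, `clocks.sm` and `clocks.max.sm` fields and `--format=csv,noheader,nounits`; some GeForce drivers report certain fields as "[Not Supported]", and `clocks.max.sm` is only a stand-in for the card's full-boost clock. The 70% utilization and 95% clock thresholds are hypothetical choices, not values from NVIDIA or this thread, and the script only detects the condition; it does not force Max Boost.

```python
import subprocess
from typing import List, NamedTuple

# Fields queried from nvidia-smi; clocks.max.sm stands in for the full-boost clock (assumption).
QUERY_FIELDS = "index,utilization.gpu,clocks.sm,clocks.max.sm"


class GpuSample(NamedTuple):
    index: int
    util_pct: float
    sm_mhz: float
    max_sm_mhz: float


def query_nvidia_smi() -> str:
    """Run nvidia-smi once and return its CSV output (needs an NVIDIA driver on the host)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def parse_samples(csv_text: str) -> List[GpuSample]:
    """Parse one CSV row per GPU, skipping rows with unsupported or malformed fields."""
    samples = []
    for line in csv_text.strip().splitlines():
        fields = [f.strip() for f in line.split(",")]
        if len(fields) != 4:
            continue
        try:
            samples.append(GpuSample(int(fields[0]), float(fields[1]), float(fields[2]), float(fields[3])))
        except ValueError:
            continue  # e.g. "[Not Supported]" on some GeForce drivers
    return samples


def stuck_below_boost(s: GpuSample, min_util: float = 70.0, min_ratio: float = 0.95) -> bool:
    """True if the GPU is busy but its SM clock sits well below the maximum (hypothetical thresholds)."""
    return s.util_pct >= min_util and s.sm_mhz < min_ratio * s.max_sm_mhz


if __name__ == "__main__":
    # Illustrative rows in nvidia-smi's CSV layout, not captured output:
    # GPU 0 is busy at ~875 MHz, GPU 1 is at its maximum clock.
    example = "0, 76, 875, 1124\n1, 75, 1124, 1124\n"
    for sample in parse_samples(example):
        state = "busy but below max clock" if stuck_below_boost(sample) else "ok"
        print(f"GPU {sample.index}: {state}")
```

On a real host, `parse_samples(query_nvidia_smi())` would replace the example string, for instance in a loop that samples every few seconds after a new task starts, which is when Jacob sees the card fail to boost back up.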

I haven't read the other thread yet... Does performance with these newer drivers still degrade when you force max clocks? I ask because on my GT640 the GPU load is a constant 99% (-> max boost with any driver), and yet I was seeing a severe performance drop with the previous WHQL.

The GT640 doesn't Boost, so it shouldn't be impacted the same way.

> 335.23 WHQL was released today... I'll post back later on Win7 CPU usage.

Have you tried playing a game, pausing it, then resuming it, and seeing if the same thing happens? If it does, then that WOULD be a major problem for all role-playing gamers, and NVIDIA would be interested in fixing it. If it only happens with BOINC, then maybe the BOINC programmers need to learn how to make their software trigger the clock speed-up the way games do. If, on the other hand, the card never slows down when the game is paused, then that is a whole other kettle of fish.

skgiven:

> The GT640 doesn't Boost, so it shouldn't be impacted the same way.

Right. That's why I asked whether you guys also see a performance drop even after you fix the boost clock.

> > Perhaps using less CPU for the GPUGrid WUs is in some way to blame.
>
> Yes, that's the most obvious explanation. Maybe you ran more CPU tasks (as an extra CPU core was free), or found that the bus became more bottlenecked than before (GPU task related). Sometimes what's running on the CPU can greatly impact GPU performance (some CPU apps run at higher priorities than others), and then there is the HD Graphics 4000: if you ran Einstein (or other iGPU tasks), there is a chance that started gobbling up all the resources.

I ran one more CPU task, saw the result, then reverted to the same CPU load as before (80-90%) and still didn't see performance where it used to be. In fact, there was next to no difference between +1 and +0 CPU tasks, if I remember correctly. The HD4000 ran Einstein tasks just as it did before (and showed no performance change either). The mix of other CPU projects didn't change either. And why would the bus suddenly become a bottleneck when the GPU is doing less work?

The GT640 is a special card in that it's more memory-bandwidth-limited than any other current card. It could be that NVIDIA changed some functions so that they require more bandwidth, which wouldn't impact cards that aren't yet limited. But memory controller utilization did not really change either (I didn't check for a difference of a few %). Or it could be that the new syncing mechanism between CPU and GPU just costs some performance, and you guys should also see it once you fix that boost clock. If this is true, I'd expect the impact to increase with GPU speed.

MrS
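
The closing hypothesis, that a fixed CPU-GPU synchronization cost would hurt faster cards more, follows from simple arithmetic. The sketch below assumes each simulation step costs `gpu_ms` of GPU compute plus a fixed `sync_ms` of synchronization overhead that does not shrink on a faster card; both numbers are hypothetical and are not measurements of ACEMD or of any driver.

```python
def overhead_fraction(gpu_ms: float, sync_ms: float) -> float:
    """Share of each step spent on a fixed synchronization cost instead of GPU work."""
    return sync_ms / (gpu_ms + sync_ms)


SYNC_MS = 0.5  # hypothetical fixed per-step CPU-GPU sync cost, identical on every card

# Hypothetical per-step GPU compute times: a faster card finishes the same step sooner.
cards = {"baseline card": 10.0, "2x faster card": 5.0, "4x faster card": 2.5}

for name, gpu_ms in cards.items():
    print(f"{name}: {overhead_fraction(gpu_ms, SYNC_MS):.1%} of each step lost to sync")
```

With these made-up numbers the lost share rises from about 4.8% to 16.7% as the card gets four times faster. The same reasoning applies to skgiven's earlier remark that WDDM overhead weighs more on higher-end cards.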

> skgiven:

I have suspended several times for varying amounts of time (from a few minutes to a few hours), and my clocks go back up to my OC values, now 1254 and 1202 MHz.

Well, thanks for testing. I wish I knew what my particular problem is. It's most obvious, for me, when a GPUGrid task completes. Due to my BOINC settings, it then begins downloading the new task, so there is a "lull" with no GPU activity, and then a couple of minutes later the new task kicks in, often not at Max Boost.

MrS, I found that the last 2 drivers were slightly faster on my W7 system with 2 GPUs than the 331.40 drivers, without changing any BOINC settings (use 75% of the CPUs). I'm not using the iGPU to crunch.

Actually, I tried 335 yesterday and have completed 1.8 WUs since then, at normal crunching times (approximately within the margin of error): 34,000-35,000 s per short task, whereas 334 WHQL landed at over 36,000 s. It could be that they fixed whatever was happening last time, or that something just went wrong with my system last time... although that test lasted several days and WUs, with different settings.

If there isn't Gold at the end of a Rainbow, we can always hope there is...

I stand corrected: I don't see any performance drop anymore with 335 WHQL on 2 hosts (GT640 and GTX660Ti)! The Boost state is a TDP-limited ~1.10 GHz at ~1.07 V, just as before.

I finally had some time tonight to test GPU utilization under a few different conditions.
